mirror of

https://github.com/blakeblackshear/frigate.git

synced 2026-02-20 13:54:36 +01:00

Add API and WebUI to export recordings (#6550)

* Add ability to export frigate clips
* Add http endpoint
* Add dir to nginx
* Add webUI
* Formatting
* Cleanup unused
* Optimize timelapse
* Fix pts
* Use JSON body for params
* Use hwaccel to encode when available
* Print ffmpeg command when fail
* Print ffmpeg command when fail
* Add separate ffmpeg preset for timelapse
* Add docs outlining the export directory
* Add export docs
* Use ''
* Fix playlist max time
* Lower max playlist time
* Add api docs for export
* isort fixes


```diff
@@ -172,6 +172,27 @@ http {
             root /media/frigate;
         }
 
+        location /exports/ {
+            add_header 'Access-Control-Allow-Origin' "$http_origin" always;
+            add_header 'Access-Control-Allow-Credentials' 'true';
+            add_header 'Access-Control-Expose-Headers' 'Content-Length';
+            if ($request_method = 'OPTIONS') {
+                add_header 'Access-Control-Allow-Origin' "$http_origin";
+                add_header 'Access-Control-Max-Age' 1728000;
+                add_header 'Content-Type' 'text/plain; charset=UTF-8';
+                add_header 'Content-Length' 0;
+                return 204;
+            }
+
+            types {
+                video/mp4 mp4;
+            }
+
+            autoindex on;
+            autoindex_format json;
+            root /media/frigate;
+        }
+
         location /ws {
             proxy_pass http://mqtt_ws/;
             proxy_http_version 1.1;
```
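With `autoindex on` and `autoindex_format json`, a `GET /exports/` request returns the directory listing as a JSON array. The following is a minimal Python sketch of a client that picks finished exports out of such a listing. It assumes that each entry has nginx's `name` and `type` keys, and that unfinished files still carry the `in_progress.` prefix written by `RecordingExporter`. The `finished_exports` helper and the sample payload are hypothetical and exist only for illustration.

```python
import json


def finished_exports(listing_json: str) -> list[str]:
    """Return names of completed mp4 exports from an nginx JSON autoindex listing."""
    entries = json.loads(listing_json)
    return [
        entry["name"]
        for entry in entries
        # nginx marks regular files with type "file" (folders are "directory")
        if entry.get("type") == "file"
        and entry["name"].endswith(".mp4")
        # RecordingExporter writes to "in_progress.<...>.mp4" until ffmpeg finishes
        and not entry["name"].startswith("in_progress.")
    ]


# Hypothetical listing shaped like nginx's JSON autoindex output.
sample = json.dumps(
    [
        {"name": "front_door_2023_05_21_02:30__2023_05_21_03:30.mp4", "type": "file", "size": 1048576},
        {"name": "in_progress.back_yard@2023_05_21_04:00__2023_05_21_05:00.mp4", "type": "file", "size": 4096},
        {"name": "old", "type": "directory"},
    ]
)
print(finished_exports(sample))
```

In practice, the listing string would come from a request such as `urllib.request.urlopen("http://<frigate-host>:<port>/exports/")`. The host and port depend on the deployment.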

````diff
@@ -80,3 +80,7 @@ record:
 dog: 2
 car: 7
 ```
+
+## How do I export recordings?
+
+The export page in the Frigate WebUI can export a camera's recordings between a chosen start and end time, either as a real-time clip or as a timelapse. Exports can take a while to finish. While an export is running, its file in `/media/frigate/exports` is prefixed with `in_progress.`, so leave the file alone until that prefix is gone.
````
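The following sketch shows the `in_progress.` convention concretely. It builds the in-progress and final paths the same way `RecordingExporter.run` does, using the `%Y_%m_%d_%I:%M` pattern from `get_datetime_from_timestamp`. It takes `datetime` objects instead of Unix timestamps, so the result does not depend on the host's time zone. `export_paths` is a hypothetical helper, not part of Frigate.

```python
from datetime import datetime

# strftime pattern used by get_datetime_from_timestamp in frigate/record/export.py.
# %I is a 12-hour clock and the pattern has no AM/PM marker.
NAME_FORMAT = "%Y_%m_%d_%I:%M"


def export_paths(export_dir: str, camera: str, start: datetime, end: datetime) -> tuple[str, str]:
    """Return (in-progress path, final path) for one export."""
    span = f"{start.strftime(NAME_FORMAT)}__{end.strftime(NAME_FORMAT)}"
    in_progress = f"{export_dir}/in_progress.{camera}@{span}.mp4"
    final = f"{export_dir}/{camera}_{span}.mp4"
    return in_progress, final


tmp_path, final_path = export_paths(
    "/media/frigate/exports", "front_door", datetime(2023, 5, 21, 14, 30), datetime(2023, 5, 21, 15, 0)
)
print(tmp_path)    # /media/frigate/exports/in_progress.front_door@2023_05_21_02:30__2023_05_21_03:00.mp4
print(final_path)  # /media/frigate/exports/front_door_2023_05_21_02:30__2023_05_21_03:00.mp4
```

The pattern drops AM/PM, so an export of 02:30 to 03:00 in the morning gets the same final name as the afternoon export above. On Linux, `os.rename` replaces an existing destination file.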

```diff
@@ -24,6 +24,7 @@ Frigate uses the following locations for read/write operations in the container.
 - `/config`: Used to store the Frigate config file and sqlite database. You will also see a few files alongside the database file while Frigate is running.
 - `/media/frigate/clips`: Used for snapshot storage. In the future, it will likely be renamed from `clips` to `snapshots`. The file structure here cannot be modified and isn't intended to be browsed or managed manually.
 - `/media/frigate/recordings`: Internal system storage for recording segments. The file structure here cannot be modified and isn't intended to be browsed or managed manually.
+- `/media/frigate/exports`: Storage for clips and timelapses that have been exported via the WebUI or API.
 - `/tmp/cache`: Cache location for recording segments. Initial recordings are written here before being checked and converted to mp4 and moved to the recordings folder.
 - `/dev/shm`: It is not recommended to modify this directory or map it with docker. This is the location for raw decoded frames in shared memory and its size is impacted by the `shm-size` calculations below.
```

@@ -221,7 +222,7 @@ These settings were tested on DSM 7.1.1-42962 Update 4

|

||||

|

||||

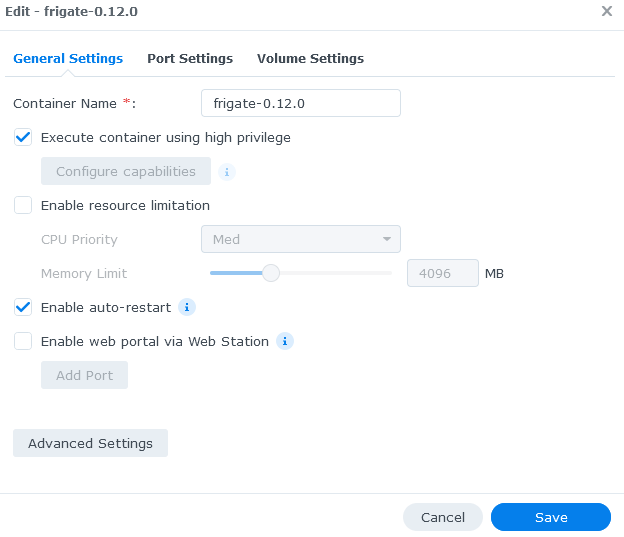

The `Execute container using high privilege` option needs to be enabled in order to give the frigate container the elevated privileges it may need.

|

||||

|

||||

The `Enable auto-restart` option can be enabled if you want the container to automatically restart whenever it improperly shuts down due to an error.

|

||||

The `Enable auto-restart` option can be enabled if you want the container to automatically restart whenever it improperly shuts down due to an error.

|

||||

|

||||

|

||||

|

||||

|

||||

```diff
@@ -271,6 +271,20 @@ HTTP Live Streaming Video on Demand URL for the specified event. Can be viewed i
 
 HTTP Live Streaming Video on Demand URL for the camera with the specified time range. Can be viewed in an application like VLC.
 
+### `POST /api/export/<camera>/start/<start-timestamp>/end/<end-timestamp>`
+
+Export the recordings for `camera` from `start-timestamp` to `end-timestamp` (Unix timestamps in seconds) as a single mp4 file in the `/media/frigate/exports` folder. The export runs in the background, so a successful response only means that it has started.
+
+It is also possible to export the recording as a timelapse played back at 25x speed.
+
+**Optional Body:** `playback` is either `realtime` (the default, also used for unrecognized values) or `timelapse_25x`.
+
+```json
+{
+  "playback": "realtime"
+}
+```
+
 ### `GET /api/<camera_name>/recordings/summary`
 
 Hourly summary of recordings data for a camera.
```
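The following client-side sketch calls the export endpoint using only the Python standard library. It builds the request without sending it. The `build_export_request` helper and the `http://frigate.local:5000` base URL are placeholders. The `Content-Type: application/json` header matters: Flask's `request.get_json()` only parses bodies declared as JSON unless it is forced. Without the header, `playback` would silently fall back to `realtime`.

```python
import json
import urllib.request


def build_export_request(
    base_url: str, camera: str, start_ts: int, end_ts: int, playback: str = "realtime"
) -> urllib.request.Request:
    """Build (but do not send) a POST to /api/export/<camera>/start/<start>/end/<end>."""
    if end_ts <= start_ts:
        raise ValueError("end_ts must be after start_ts")
    url = f"{base_url.rstrip('/')}/api/export/{camera}/start/{int(start_ts)}/end/{int(end_ts)}"
    body = json.dumps({"playback": playback}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},  # lets Flask parse the body
        method="POST",
    )


req = build_export_request(
    "http://frigate.local:5000", "front_door", 1684679400, 1684683000, "timelapse_25x"
)
print(req.get_method(), req.full_url)
```

The handler starts a `RecordingExporter` thread and returns immediately. As a result, `urllib.request.urlopen(req)` should come back with status 200 and the text `Starting export of recording` well before the mp4 is finished.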

```diff
@@ -24,6 +24,7 @@ from frigate.const import (
     CLIPS_DIR,
     CONFIG_DIR,
     DEFAULT_DB_PATH,
+    EXPORT_DIR,
     MODEL_CACHE_DIR,
     RECORD_DIR,
 )
```

```diff
@@ -68,7 +69,14 @@ class FrigateApp:
             os.environ[key] = value
 
     def ensure_dirs(self) -> None:
-        for d in [CONFIG_DIR, RECORD_DIR, CLIPS_DIR, CACHE_DIR, MODEL_CACHE_DIR]:
+        for d in [
+            CONFIG_DIR,
+            RECORD_DIR,
+            CLIPS_DIR,
+            CACHE_DIR,
+            MODEL_CACHE_DIR,
+            EXPORT_DIR,
+        ]:
             if not os.path.exists(d) and not os.path.islink(d):
                 logger.info(f"Creating directory: {d}")
                 os.makedirs(d)
```
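The `ensure_dirs` loop creates a directory only when the path neither exists nor is a symlink. The following standalone sketch applies the same check to a temporary directory instead of `/media/frigate`. The function name mirrors `FrigateApp.ensure_dirs`, but this is a simplified stand-in, not Frigate's code.

The `islink` test matters for dangling symlinks. `os.path.exists` returns `False` for them, yet `os.makedirs` on that path would fail because the link itself already exists.

```python
import os
import tempfile


def ensure_dirs(dirs: list[str]) -> list[str]:
    """Create each missing directory and return the paths that were created."""
    created = []
    for d in dirs:
        # Skip paths that exist, and symlinks even when their target is missing.
        if not os.path.exists(d) and not os.path.islink(d):
            os.makedirs(d)  # also creates any missing parent directories
            created.append(d)
    return created


with tempfile.TemporaryDirectory() as base:
    dirs = [os.path.join(base, name) for name in ("clips", "recordings", "exports")]
    print(len(ensure_dirs(dirs)))  # 3: all three are new
    print(len(ensure_dirs(dirs)))  # 0: nothing left to create
```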

```diff
@@ -4,6 +4,7 @@ MODEL_CACHE_DIR = f"{CONFIG_DIR}/model_cache"
 BASE_DIR = "/media/frigate"
 CLIPS_DIR = f"{BASE_DIR}/clips"
 RECORD_DIR = f"{BASE_DIR}/recordings"
+EXPORT_DIR = f"{BASE_DIR}/exports"
 BIRDSEYE_PIPE = "/tmp/cache/birdseye"
 CACHE_DIR = "/tmp/cache"
 YAML_EXT = (".yaml", ".yml")
```

```diff
@@ -28,3 +29,4 @@ DRIVER_INTEL_iHD = "iHD"
 
 MAX_SEGMENT_DURATION = 600
 SECONDS_IN_DAY = 60 * 60 * 24
+MAX_PLAYLIST_SECONDS = 7200  # support 2 hour segments for a single playlist to account for cameras with inconsistent segment times
```

```diff
@@ -2,6 +2,7 @@
 
 import logging
 import os
+from enum import Enum
 from typing import Any
 
 from frigate.const import BTBN_PATH
```

```diff
@@ -116,7 +117,7 @@ PRESETS_HW_ACCEL_SCALE = {
     "default": "-r {0} -s {1}x{2}",
 }
 
-PRESETS_HW_ACCEL_ENCODE = {
+PRESETS_HW_ACCEL_ENCODE_BIRDSEYE = {
     "preset-rpi-32-h264": "ffmpeg -hide_banner {0} -c:v h264_v4l2m2m {1}",
     "preset-rpi-64-h264": "ffmpeg -hide_banner {0} -c:v h264_v4l2m2m {1}",
     "preset-vaapi": "ffmpeg -hide_banner -hwaccel vaapi -hwaccel_output_format vaapi -hwaccel_device {2} {0} -c:v h264_vaapi -g 50 -bf 0 -profile:v high -level:v 4.1 -sei:v 0 -an -vf format=vaapi|nv12,hwupload {1}",
```

```diff
@@ -127,6 +128,17 @@ PRESETS_HW_ACCEL_ENCODE = {
     "default": "ffmpeg -hide_banner {0} -c:v libx264 -g 50 -profile:v high -level:v 4.1 -preset:v superfast -tune:v zerolatency {1}",
 }
 
+PRESETS_HW_ACCEL_ENCODE_TIMELAPSE = {
+    "preset-rpi-32-h264": "ffmpeg -hide_banner {0} -c:v h264_v4l2m2m {1}",
+    "preset-rpi-64-h264": "ffmpeg -hide_banner {0} -c:v h264_v4l2m2m {1}",
+    "preset-vaapi": "ffmpeg -hide_banner -hwaccel vaapi -hwaccel_output_format vaapi -hwaccel_device {2} {0} -c:v h264_vaapi {1}",
+    "preset-intel-qsv-h264": "ffmpeg -hide_banner {0} -c:v h264_qsv -g 50 -bf 0 -profile:v high -level:v 4.1 -async_depth:v 1 {1}",
+    "preset-intel-qsv-h265": "ffmpeg -hide_banner {0} -c:v hevc_qsv -g 50 -bf 0 -profile:v high -level:v 4.1 -async_depth:v 1 {1}",
+    "preset-nvidia-h264": "ffmpeg -hide_banner -hwaccel cuda -hwaccel_output_format cuda -extra_hw_frames 8 {0} -c:v h264_nvenc {1}",
+    "preset-nvidia-h265": "ffmpeg -hide_banner -hwaccel cuda -hwaccel_output_format cuda -extra_hw_frames 8 {0} -c:v hevc_nvenc {1}",
+    "default": "ffmpeg -hide_banner {0} -c:v libx264 -preset:v ultrafast -tune:v zerolatency {1}",
+}
+
 
 def parse_preset_hardware_acceleration_decode(arg: Any) -> list[str]:
     """Return the correct preset if in preset format otherwise return None."""
```

```diff
@@ -161,12 +173,24 @@ def parse_preset_hardware_acceleration_scale(
     return scale
 
 
-def parse_preset_hardware_acceleration_encode(arg: Any, input: str, output: str) -> str:
-    """Return the correct scaling preset or default preset if none is set."""
-    if not isinstance(arg, str):
-        return PRESETS_HW_ACCEL_ENCODE["default"].format(input, output)
+class EncodeTypeEnum(str, Enum):
+    birdseye = "birdseye"
+    timelapse = "timelapse"
 
-    return PRESETS_HW_ACCEL_ENCODE.get(arg, PRESETS_HW_ACCEL_ENCODE["default"]).format(
+
+def parse_preset_hardware_acceleration_encode(
+    arg: Any, input: str, output: str, type: EncodeTypeEnum = EncodeTypeEnum.birdseye
+) -> str:
+    """Return the correct encoding preset or default preset if none is set."""
+    if type == EncodeTypeEnum.birdseye:
+        arg_map = PRESETS_HW_ACCEL_ENCODE_BIRDSEYE
+    elif type == EncodeTypeEnum.timelapse:
+        arg_map = PRESETS_HW_ACCEL_ENCODE_TIMELAPSE
+
+    if not isinstance(arg, str):
+        return arg_map["default"].format(input, output)
+
+    return arg_map.get(arg, arg_map["default"]).format(
         input,
         output,
         _gpu_selector.get_selected_gpu(),
```
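The following sketch shows what the timelapse path ends up running. It fills the `default` template of `PRESETS_HW_ACCEL_ENCODE_TIMELAPSE` with the same output arguments that `RecordingExporter` passes (`-vf setpts=0.04*PTS -r 30 -an <file>`). `timelapse_argv` and its `speedup` parameter are hypothetical.

The sketch uses `shlex.split` where the exporter uses `str.split(" ")`. The two agree for these arguments, but `shlex.split` also copes with quoted arguments that contain spaces.

```python
import shlex

# The "default" entry of PRESETS_HW_ACCEL_ENCODE_TIMELAPSE.
TIMELAPSE_DEFAULT = "ffmpeg -hide_banner {0} -c:v libx264 -preset:v ultrafast -tune:v zerolatency {1}"


def timelapse_argv(ffmpeg_input: str, output_path: str, speedup: int = 25) -> list[str]:
    """Fill the preset template with timelapse output args and split it into argv."""
    # setpts scales presentation timestamps; 1/25 = 0.04 plays the footage 25x faster.
    output_args = f"-vf setpts={1 / speedup}*PTS -r 30 -an {output_path}"
    return shlex.split(TIMELAPSE_DEFAULT.format(ffmpeg_input, output_args))
```

With the default factor, 25 minutes of footage become about one minute of video. `-r 30` sets the output frame rate and `-an` drops the audio track.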

```diff
@@ -35,6 +35,7 @@ from frigate.models import Event, Recordings, Timeline
 from frigate.object_processing import TrackedObject
 from frigate.plus import PlusApi
 from frigate.ptz import OnvifController
+from frigate.record.export import PlaybackFactorEnum, RecordingExporter
 from frigate.stats import stats_snapshot
 from frigate.storage import StorageMaintainer
 from frigate.util import (
```

```diff
@@ -1504,6 +1505,22 @@ def vod_event(id):
     )
 
 
+@bp.route("/export/<camera_name>/start/<start_time>/end/<end_time>", methods=["POST"])
+def export_recording(camera_name: str, start_time: int, end_time: int):
+    playback_factor = (request.get_json(silent=True) or {}).get("playback", "realtime")
+    exporter = RecordingExporter(
+        current_app.frigate_config,
+        camera_name,
+        int(start_time),
+        int(end_time),
+        PlaybackFactorEnum[playback_factor]
+        if playback_factor in PlaybackFactorEnum.__members__.values()
+        else PlaybackFactorEnum.realtime,
+    )
+    exporter.start()
+    return "Starting export of recording", 200
+
+
 def imagestream(detected_frames_processor, camera_name, fps, height, draw_options):
     while True:
         # max out at specified FPS
```
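The export route looks the playback factor up by name and falls back to `realtime` for anything it does not recognize. The following sketch expresses the same defaulting as a pure function that can be unit-tested without Flask. It looks members up by value, which is equivalent here because each member's name matches its value. `parse_playback` is hypothetical, and the enum is redeclared only to keep the example self-contained. Passing `None` covers a request without a JSON body, because that is what `request.get_json(silent=True)` returns in that case.

```python
from enum import Enum
from typing import Any, Optional


class PlaybackFactorEnum(str, Enum):
    # Mirrors the enum defined in frigate/record/export.py.
    realtime = "realtime"
    timelapse_25x = "timelapse_25x"


def parse_playback(body: Optional[Any]) -> PlaybackFactorEnum:
    """Pick the playback factor from an optional JSON body, defaulting to realtime."""
    if not isinstance(body, dict):
        # No body, invalid JSON, or a JSON value that is not an object.
        return PlaybackFactorEnum.realtime
    value = body.get("playback", PlaybackFactorEnum.realtime.value)
    try:
        return PlaybackFactorEnum(value)  # lookup by value, e.g. "timelapse_25x"
    except (ValueError, TypeError):
        return PlaybackFactorEnum.realtime
```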

101

frigate/record/export.py

Normal file


```diff
@@ -0,0 +1,101 @@
+"""Export recordings to storage."""
+
+import datetime
+import logging
+import os
+import subprocess as sp
+import threading
+from enum import Enum
+
+from frigate.config import FrigateConfig
+from frigate.const import EXPORT_DIR, MAX_PLAYLIST_SECONDS
+from frigate.ffmpeg_presets import (
+    EncodeTypeEnum,
+    parse_preset_hardware_acceleration_encode,
+)
+
+logger = logging.getLogger(__name__)
+
+
+class PlaybackFactorEnum(str, Enum):
+    realtime = "realtime"
+    timelapse_25x = "timelapse_25x"
+
+
+class RecordingExporter(threading.Thread):
+    """Exports a specific set of recordings for a camera to storage as a single file."""
+
+    def __init__(
+        self,
+        config: FrigateConfig,
+        camera: str,
+        start_time: int,
+        end_time: int,
+        playback_factor: PlaybackFactorEnum,
+    ) -> None:
+        threading.Thread.__init__(self)
+        self.config = config
+        self.camera = camera
+        self.start_time = start_time
+        self.end_time = end_time
+        self.playback_factor = playback_factor
+
+    def get_datetime_from_timestamp(self, timestamp: int) -> str:
+        """Convenience function to get a simple date time from timestamp."""
+        return datetime.datetime.fromtimestamp(timestamp).strftime("%Y_%m_%d_%I:%M")
+
+    def run(self) -> None:
+        logger.debug(
+            f"Beginning export for {self.camera} from {self.start_time} to {self.end_time}"
+        )
+        file_name = f"{EXPORT_DIR}/in_progress.{self.camera}@{self.get_datetime_from_timestamp(self.start_time)}__{self.get_datetime_from_timestamp(self.end_time)}.mp4"
+        final_file_name = f"{EXPORT_DIR}/{self.camera}_{self.get_datetime_from_timestamp(self.start_time)}__{self.get_datetime_from_timestamp(self.end_time)}.mp4"
+
+        playlist_lines = []
+        if (self.end_time - self.start_time) <= MAX_PLAYLIST_SECONDS:
+            playlist_url = f"http://127.0.0.1:5000/vod/{self.camera}/start/{self.start_time}/end/{self.end_time}/index.m3u8"
+            ffmpeg_input = (
+                f"-y -protocol_whitelist pipe,file,http,tcp -i {playlist_url}"
+            )
+        else:
+            playlist_start = self.start_time
+
+            while playlist_start < self.end_time:
+                playlist_lines.append(
+                    f"file 'http://127.0.0.1:5000/vod/{self.camera}/start/{playlist_start}/end/{min(playlist_start + MAX_PLAYLIST_SECONDS, self.end_time)}/index.m3u8'"
+                )
+                playlist_start += MAX_PLAYLIST_SECONDS
+
+            ffmpeg_input = "-y -protocol_whitelist pipe,file,http,tcp -f concat -safe 0 -i /dev/stdin"
+
+        if self.playback_factor == PlaybackFactorEnum.realtime:
+            ffmpeg_cmd = (
+                f"ffmpeg -hide_banner {ffmpeg_input} -c copy {file_name}"
+            ).split(" ")
+        elif self.playback_factor == PlaybackFactorEnum.timelapse_25x:
+            ffmpeg_cmd = (
+                parse_preset_hardware_acceleration_encode(
+                    self.config.ffmpeg.hwaccel_args,
+                    ffmpeg_input,
+                    f"-vf setpts=0.04*PTS -r 30 -an {file_name}",
+                    EncodeTypeEnum.timelapse,
+                )
+            ).split(" ")
+
+        p = sp.run(
+            ffmpeg_cmd,
+            input="\n".join(playlist_lines),
+            encoding="ascii",
+            capture_output=True,
+        )
+
+        if p.returncode != 0:
+            logger.error(
+                f"Failed to export recording for command {' '.join(ffmpeg_cmd)}"
+            )
+            logger.error(p.stderr)
+            return
+
+        logger.debug(f"Updating finalized export {file_name}")
+        os.rename(file_name, final_file_name)
+        logger.debug(f"Finished exporting {file_name}")
```
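When the requested range is longer than `MAX_PLAYLIST_SECONDS`, `run()` splits it into consecutive VOD playlist URLs. It then feeds those URLs to ffmpeg's concat demuxer on stdin, which is why that command uses `-f concat -safe 0` and allows `http` in `-protocol_whitelist`.

The following standalone sketch performs the same splitting and assumes the same `127.0.0.1:5000` VOD base URL. Unlike the exporter, it always produces a list, even for a range that fits in one window. `vod_windows` and `concat_list` are hypothetical names.

```python
MAX_PLAYLIST_SECONDS = 7200  # value from frigate/const.py


def vod_windows(start: int, end: int, max_seconds: int = MAX_PLAYLIST_SECONDS) -> list[tuple[int, int]]:
    """Split [start, end) into consecutive windows no longer than max_seconds."""
    windows = []
    window_start = start
    while window_start < end:
        # The last window is clipped to the requested end time.
        windows.append((window_start, min(window_start + max_seconds, end)))
        window_start += max_seconds
    return windows


def concat_list(camera: str, start: int, end: int, base: str = "http://127.0.0.1:5000") -> str:
    """Build concat-demuxer input lines, one VOD playlist per window."""
    return "\n".join(
        f"file '{base}/vod/{camera}/start/{s}/end/{e}/index.m3u8'"
        for s, e in vod_windows(start, end)
    )


print(vod_windows(0, 10000))  # [(0, 7200), (7200, 10000)]
```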

```diff
@@ -44,6 +44,7 @@ export default function Sidebar() {
       </Match>
       {birdseye?.enabled ? <Destination href="/birdseye" text="Birdseye" /> : null}
       <Destination href="/events" text="Events" />
+      <Destination href="/exports" text="Exports" />
       <Separator />
       <Destination href="/storage" text="Storage" />
       <Destination href="/system" text="System" />
```

```diff
@@ -31,6 +31,7 @@ export default function App() {
           <AsyncRoute path="/cameras/:camera" getComponent={cameraComponent} />
           <AsyncRoute path="/birdseye" getComponent={Routes.getBirdseye} />
           <AsyncRoute path="/events" getComponent={Routes.getEvents} />
+          <AsyncRoute path="/exports" getComponent={Routes.getExports} />
           <AsyncRoute
             path="/recording/:camera/:date?/:hour?/:minute?/:second?"
             getComponent={Routes.getRecording}
```

83

web/src/routes/Export.jsx

Normal file


```diff
@@ -0,0 +1,83 @@
+import { h } from 'preact';
+import Heading from '../components/Heading';
+import { useState } from 'preact/hooks';
+import useSWR from 'swr';
+import Button from '../components/Button';
+import axios from 'axios';
+
+export default function Export() {
+  const { data: config } = useSWR('config');
+
+  const [camera, setCamera] = useState('select');
+  const [playback, setPlayback] = useState('select');
+  const [message, setMessage] = useState({ text: '', error: false });
+
+  const onHandleExport = () => {
+    if (camera == 'select') {
+      setMessage({ text: 'A camera needs to be selected.', error: true });
+      return;
+    }
+
+    if (playback == 'select') {
+      setMessage({ text: 'A playback factor needs to be selected.', error: true });
+      return;
+    }
+
+    const start = new Date(document.getElementById('start').value).getTime() / 1000;
+    const end = new Date(document.getElementById('end').value).getTime() / 1000;
+
+    if (!start || !end) {
+      setMessage({ text: 'A start and end time needs to be selected', error: true });
+      return;
+    }
+
+    setMessage({ text: 'Successfully started export. View the file in the /exports folder.', error: false });
+    axios.post(`export/${camera}/start/${start}/end/${end}`, { playback });
+  };
+
+  return (
+    <div className="space-y-4 p-2 px-4 w-full">
+      <Heading>Export</Heading>
+
+      {message.text && (
+        <div className={`max-h-20 ${message.error ? 'text-red-500' : 'text-green-500'}`}>{message.text}</div>
+      )}
+
+      <div>
+        <select
+          className="me-2 cursor-pointer rounded dark:bg-slate-800"
+          value={camera}
+          onChange={(e) => setCamera(e.target.value)}
+        >
+          <option value="select">Select A Camera</option>
+          {Object.keys(config?.cameras || {}).map((item) => (
+            <option key={item} value={item}>
+              {item.replaceAll('_', ' ')}
+            </option>
+          ))}
+        </select>
+        <select
+          className="ms-2 cursor-pointer rounded dark:bg-slate-800"
+          value={playback}
+          onChange={(e) => setPlayback(e.target.value)}
+        >
+          <option value="select">Select A Playback Factor</option>
+          <option value="realtime">Realtime</option>
+          <option value="timelapse_25x">Timelapse</option>
+        </select>
+      </div>
+
+      <div>
+        <Heading className="py-2" size="sm">
+          From:
+        </Heading>
+        <input className="dark:bg-slate-800" id="start" type="datetime-local" />
+        <Heading className="py-2" size="sm">
+          To:
+        </Heading>
+        <input className="dark:bg-slate-800" id="end" type="datetime-local" />
+      </div>
+      <Button onClick={() => onHandleExport()}>Submit</Button>
+    </div>
+  );
+}
```
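The page converts the two `datetime-local` inputs with `new Date(value).getTime() / 1000`, which interprets the value in the browser's local time zone. The following Python sketch performs the same conversion with the time zone passed explicitly. It also adds an ordering check that the page itself does not perform. Both helpers are hypothetical.

```python
from datetime import datetime, timedelta, timezone, tzinfo


def datetime_local_to_unix(value: str, tz: tzinfo) -> int:
    """Convert a datetime-local value such as "2023-05-21T14:30" to Unix seconds."""
    naive = datetime.fromisoformat(value)  # datetime-local values carry no UTC offset
    return int(naive.replace(tzinfo=tz).timestamp())


def export_range(start_value: str, end_value: str, tz: tzinfo) -> tuple[int, int]:
    """Return (start, end) in Unix seconds, rejecting empty or reversed ranges."""
    if not start_value or not end_value:
        raise ValueError("A start and end time needs to be selected")
    start = datetime_local_to_unix(start_value, tz)
    end = datetime_local_to_unix(end_value, tz)
    if end <= start:
        raise ValueError("The end time must be after the start time")
    return start, end


print(export_range("2023-05-21T14:30", "2023-05-21T15:30", timezone.utc))  # (1684679400, 1684683000)
print(datetime_local_to_unix("2023-05-21T14:30", timezone(timedelta(hours=2))))  # 1684672200
```

The same wall-clock value maps to different timestamps in different zones. An export requested from a browser in another time zone than the Frigate host therefore covers the times as the browser sees them.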

```diff
@@ -23,6 +23,11 @@ export async function getEvents(_url, _cb, _props) {
   return module.default;
 }
 
+export async function getExports(_url, _cb, _props) {
+  const module = await import('./Export.jsx');
+  return module.default;
+}
+
 export async function getRecording(_url, _cb, _props) {
   const module = await import('./Recording.jsx');
   return module.default;
```
