Previously, people could send arbitrary data as the feature type. Now it
is validated.

Fixes https://github.com/Unleash/unleash/issues/7751

---------

Co-authored-by: Thomas Heartman <thomas@getunleash.io>

Changed the URL of event search to `search/events` to align with
`search/features`. Also created a search controller to keep all
searches in one place.

Added first test.

This PR adds Grafana gauges for all the existing resource limits. The

primary purpose is to be able to use this in alerting. Secondarily, we

can also use it to get better insights into how many customers have

increased their limits, as well as how many people are approaching their

limit, regardless of whether it's been increased or not.

## Discussion points

### Implementation

The first approach I took (in

87528b4c67),

was to add a new gauge for each resource limit. However, there's a lot

of boilerplate for it.

I thought doing it like this (the current implementation) would make it

easier. We should still be able to use the labelName to collate this in

Grafana, as far as I understand? As a bonus, we'd automatically get new

resource limits when we add them to the schema.

``` tsx
const resourceLimit = createGauge({
    name: 'resource_limit',
    help: 'The maximum number of resources allowed.',
    labelNames: ['resource'],
});

// ...

for (const [resource, limit] of Object.entries(config.resourceLimits)) {
    resourceLimit.labels({ resource }).set(limit);
}
```

That way, when checking the stats, we should be able to do something

like this:

``` promql
resource_limit{resource="constraintValues"}
```

### Do we need to reset gauges?

I noticed that we reset gauges before setting values in them all over

the place. I don't know if that's necessary. I'd like to get that
clarified before merging this.

https://linear.app/unleash/issue/2-2501/adapt-origin-middleware-to-stop-logging-ui-requests-and-start

This adapts the new origin middleware to stop logging UI requests (too

noisy) and adds new Prometheus metrics.

<img width="745" alt="image"

src="https://github.com/user-attachments/assets/d0c7f51d-feb6-4ff5-b856-77661be3b5a9">

This should allow us to better analyze this data. If we see a lot of API

requests, we can dive into the logs for that instance and check the

logged data, like the user agent.

This PR adds some helper methods to make listening and emitting metric

events more strict in terms of types. I think it's a positive change

aligned with our scouting principle, but if you think it's complex and

does not belong here I'm happy with dropping it.

Add ability to format events as Markdown in generic webhooks,

similar to Datadog integration.

Closes https://github.com/Unleash/unleash/issues/7646

Co-authored-by: Nuno Góis <github@nunogois.com>

https://linear.app/unleash/issue/2-2469/keep-the-latest-event-for-each-integration-configuration

This makes it so we keep the latest event for each integration

configuration, along with the previous logic of keeping the latest 100

events of the last 2 hours.

This should be a cheap nice-to-have, since now we can always know what

the latest integration event looked like for each integration

configuration. This will tie in nicely with the next task of making the

latest integration event state visible in the integration card.

Also improved the clarity of the auto-deletion explanation in the modal.

This PR adds the UI part of feature flag collaborators. Collaborators are hidden on windows smaller than size XL because we're not sure how to deal with them in those cases yet.

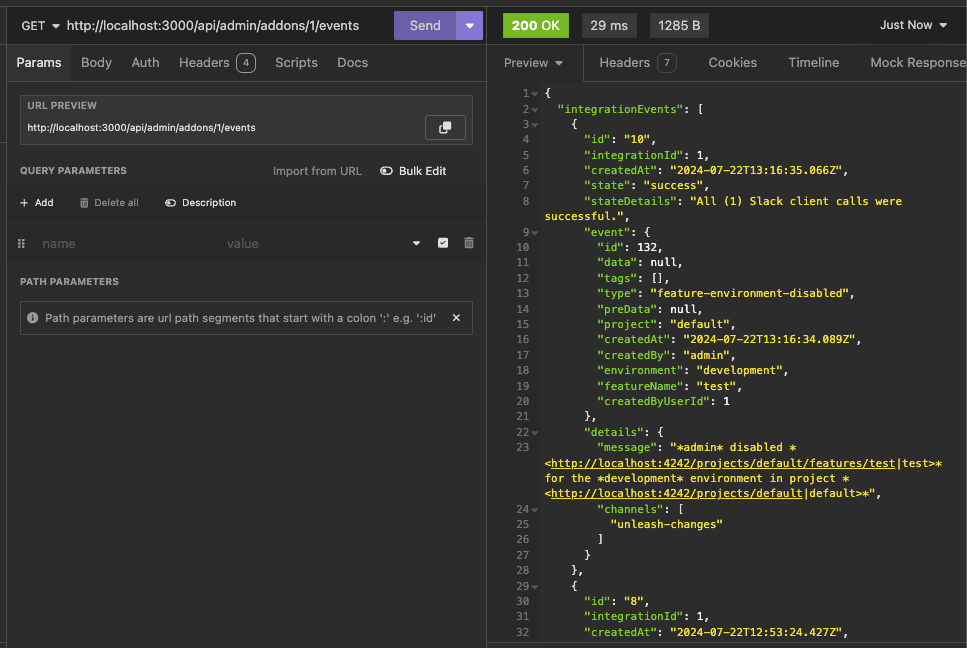

https://linear.app/unleash/issue/2-2439/create-new-integration-events-endpoint

https://linear.app/unleash/issue/2-2436/create-new-integration-event-openapi-schemas

This adds a new `/events` endpoint to the Addons API, allowing us to

fetch integration events for a specific integration configuration id.

Also includes:

- `IntegrationEventsSchema`: New schema to represent the response object
  of the list of integration events;
- `yarn schema:update`: New `package.json` script to update the OpenAPI
  spec file;
- `BasePaginationParameters`: This is copied from Enterprise. After
  merging this we should be able to refactor Enterprise to use this one
  instead of the one it has, so we don't repeat ourselves.

We're also now correctly representing the BIGSERIAL as BigInt (string +

pattern) in our OpenAPI schema. Otherwise our validation would complain,

since we're saying it's a number in the schema but in fact returning a

string.
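A minimal sketch of the string-plus-pattern representation, for illustration only. The name `integrationEventIdSchema`, the exact pattern, and the `isValidId` helper are assumptions made for this example, not the definitions used in the codebase:

```typescript
// Hypothetical schema fragment: a BIGSERIAL id serialized as a string of digits.
const integrationEventIdSchema = {
    type: 'string',
    pattern: '^[0-9]+$', // assumed pattern; the real one may differ
} as const;

// Hypothetical helper mirroring what a schema validator would check:
// the value must be a string and must match the pattern.
function isValidId(value: unknown): boolean {
    return (
        typeof value === 'string' &&
        new RegExp(integrationEventIdSchema.pattern).test(value)
    );
}
```

With a schema like this, an id returned as `'7'` validates, while the number `7` would not, which matches the mismatch described above.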

This PR allows you to gradually lower constraint values, even if they're

above the limits.

It does, however, come with a few caveats because of how Unleash deals

with constraints:

Constraints are just json blobs. They have no IDs or other

distinguishing features. Because of this, we can't compare the current

and previous state of a specific constraint.

What we can do instead is allow you to lower the number of
constraint values if and only if the number of constraints hasn't

changed. In this case, we assume that you also haven't reordered the

constraints (not possible from the UI today). That way, we can compare

constraint values between updated and existing constraints based on

their index in the constraint list.
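The index-based comparison can be sketched roughly as follows. This is an illustration under stated assumptions, not the code in this PR: the `Constraint` shape and the function name `constraintValuesAllowed` are hypothetical, and the sketch assumes that keeping the same number of values (not only lowering it) is also accepted.

```typescript
// Hypothetical shape: only the values list matters for this check.
type Constraint = { values?: string[] };

// Returns true when the update is acceptable with respect to the
// constraint-values limit.
function constraintValuesAllowed(
    updated: Constraint[],
    existing: Constraint[],
    limit: number,
): boolean {
    // Comparing by index is only meaningful when the number of
    // constraints is unchanged.
    const comparable = updated.length === existing.length;

    return updated.every((constraint, index) => {
        const count = constraint.values?.length ?? 0;
        // Within the limit: always fine.
        if (count <= limit) return true;
        // Over the limit with a changed constraint count: reject.
        if (!comparable) return false;
        // Over the limit: accept only if the count did not grow compared
        // to the constraint at the same index.
        const previous = existing[index]?.values?.length ?? 0;
        return count <= previous;
    });
}
```

For example, with a limit of 3, going from five values to four on the same constraint would pass, while going from five to six would not.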

It's not foolproof, but it's a workaround that you can use. There are a
few edge cases that pop up, but I don't think they're worth trying to
cover:

Case: If you **both** have too many constraints **and** too many

constraint values

Result: You won't be allowed to lower the number of constraints as long
as the number of constraint values is still above the limit.

Workaround: First, lower the number of constraint values until you're
under the limit, and then lower the number of constraints. Or, set the
constraint you want to delete to a constraint that is trivially true
(e.g. `currentTime > yesterday`). That will essentially take that
constraint out of the equation, achieving the same end result.

Case: You re-order constraints and at least one of them has too many

values

Result: You won't be allowed to do so (except in the edge case where the
one with too many values doesn't move or switches places with another
one with the exact same number of values).

Workaround: We don't need one. The order of constraints has no effect on

the evaluation.

https://linear.app/unleash/issue/2-2450/register-integration-events-webhook

Registers integration events in the **Webhook** integration.

Even though this touches a lot of files, most of it is preparation for

the next steps. The only actual implementation of registering

integration events is in the **Webhook** integration. The rest will

follow on separate PRs.

Here's an example of what this looks like in the database table:

```json
{
  "id": 7,
  "integration_id": 2,
  "created_at": "2024-07-18T18:11:11.376348+01:00",
  "state": "failed",
  "state_details": "Webhook request failed with status code: ECONNREFUSED",
  "event": {
    "id": 130,
    "data": null,
    "tags": [],
    "type": "feature-environment-enabled",
    "preData": null,
    "project": "default",
    "createdAt": "2024-07-18T17:11:10.821Z",
    "createdBy": "admin",
    "environment": "development",
    "featureName": "test",
    "createdByUserId": 1
  },
  "details": {
    "url": "http://localhost:1337",
    "body": "{ \"id\": 130, \"type\": \"feature-environment-enabled\", \"createdBy\": \"admin\", \"createdAt\": \"2024-07-18T17: 11: 10.821Z\", \"createdByUserId\": 1, \"data\": null, \"preData\": null, \"tags\": [], \"featureName\": \"test\", \"project\": \"default\", \"environment\": \"development\" }"
  }
}
```

This PR updates the limit validation for constraint numbers on a single

strategy. In cases where you're already above the limit, it allows you

to still update the strategy as long as you don't add any **new**

constraints (that is: the number of constraints doesn't increase).
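Reduced to its core, the rule might look like the sketch below. The function name `constraintCountAllowed` and its parameters are hypothetical and only illustrate the check described above:

```typescript
// Hypothetical helper: decides whether an update is acceptable with
// respect to the limit on the number of constraints per strategy.
function constraintCountAllowed(
    updatedCount: number,
    existingCount: number,
    limit: number,
): boolean {
    // Within the limit: always fine.
    if (updatedCount <= limit) return true;
    // Already above the limit: fine as long as the count does not increase.
    return updatedCount <= existingCount;
}
```

For a brand-new strategy, `existingCount` would be 0, so the check falls back to the plain limit.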

A discussion point: I've only tested this with unit tests of the method

directly. I haven't tested that the right parameters are passed in from

calling functions. The main reason being that that would involve

updating the fake strategy and feature stores to sync their flag lists

(or just checking that the thrown error isn't a limit exceeded error),

because right now the fake strategy store throws an error when it

doesn't find the flag I want to update.

https://linear.app/unleash/issue/2-2453/validate-patched-data-against-schema

This adds schema validation to patched data, fixing potential issues of

patching data to an invalid state.

This can be easily reproduced by patching a strategy's constraints to be

an object (invalid), instead of an array (valid):

```sh
curl -X 'PATCH' \
  'http://localhost:4242/api/admin/projects/default/features/test/environments/development/strategies/8cb3fec6-c40a-45f7-8be0-138c5aaa5263' \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '[
  {
    "path": "/constraints",
    "op": "replace",
    "from": "/constraints",
    "value": {}
  }
]'
```

Unleash will accept this because there's no validation that the patched

data actually looks like a proper strategy, and we'll start seeing

Unleash errors due to the invalid state.

This PR adapts some of our existing logic in the way we handle

validation errors to support any dynamic object. This way we can perform

schema validation with any object and still get the benefits of our

existing validation error handling.

This PR also takes the liberty of exposing the full instancePath as

propertyName, instead of only the path's last section. We believe this

has more upsides than downsides, especially now that we support the

validation of any type of object.

This PR adds Prometheus metrics for when users attempt to exceed the

limits for a given resource.

The implementation sets up a second function exported from the

ExceedsLimitError file that records metrics and then throws the error.

This could also be a static method on the class, but I'm not sure that'd

be better.
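To make the record-then-throw pattern concrete, here is a simplified sketch. It is not the code in this PR: the error message, the `recordAttempt` callback, and the in-memory `attempts` map are stand-ins for the real error text and Prometheus wiring.

```typescript
// Simplified stand-in for the real error class.
class ExceedsLimitError extends Error {
    constructor(resource: string, limit: number) {
        // Hypothetical message text, for illustration only.
        super(`Cannot create more ${resource}: the limit is ${limit}.`);
        this.name = 'ExceedsLimitError';
    }
}

// Hypothetical in-memory counter standing in for a Prometheus metric.
const attempts = new Map<string, number>();
const countAttempt = (resource: string): void => {
    attempts.set(resource, (attempts.get(resource) ?? 0) + 1);
};

// Records the attempt first, then throws. The `never` return type tells
// callers that execution does not continue past this call.
function throwExceedsLimitError(
    recordAttempt: (resource: string) => void,
    { resource, limit }: { resource: string; limit: number },
): never {
    recordAttempt(resource);
    throw new ExceedsLimitError(resource, limit);
}
```

Keeping this as a separate function means the error class itself stays free of any metrics dependency, which is one argument against the static-method alternative.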

This PR updates the OpenAPI error converter to also work for errors with

query parameters.

We previously only sent the body of the request along with the error,

which meant that query parameter errors would show up incorrectly.

For instance, given a query param with the `date` format and the invalid

value `01-2020-01`, you'd previously get the message:

> The `from` value must match format "date". You sent undefined

With this change, you'll get this instead:

> The `from` value must match format "date". You sent "01-2020-01".

The important changes here are two things:

- passing both request body and query params
- the 3 lines in `fromOpenApiValidationError` that check where we should
  get the value you sent from.

The rest of it is primarily updating tests to send the right arguments

and some slight rewording to more accurately reflect that this can be

either request body or query params.
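The lookup idea can be sketched as follows. This is not the actual `fromOpenApiValidationError` code: `valueAtPath` and `formatMessage` are hypothetical helpers, and the sketch assumes the validator reports paths that start with `/body` or `/query`. JSON Pointer escape sequences are ignored for brevity.

```typescript
// Both parts of the request that a validation error may point into.
type RequestData = { body: unknown; query: unknown };

// Walks a path such as "/query/from" through the request data and returns
// the value found there, or undefined when the path does not resolve.
function valueAtPath(data: RequestData, instancePath: string): unknown {
    const segments = instancePath.split('/').filter(Boolean);
    let current: unknown = data;
    for (const segment of segments) {
        if (typeof current !== 'object' || current === null) return undefined;
        current = (current as Record<string, unknown>)[segment];
    }
    return current;
}

// Builds a message in the style quoted above for a format error.
function formatMessage(
    data: RequestData,
    instancePath: string,
    property: string,
    format: string,
): string {
    const sent = JSON.stringify(valueAtPath(data, instancePath));
    return `The \`${property}\` value must match format "${format}". You sent ${sent}.`;
}
```

When only the body is passed along, a path pointing into the query cannot resolve, which is how the `undefined` in the old message came about.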

https://linear.app/unleash/issue/2-2435/create-migration-for-a-new-integration-events-table

Adds a DB migration that creates the `integration_events` table:

- `id`: Auto-incrementing primary key;
- `integration_id`: The id of the respective integration (i.e.
  integration configuration);
- `created_at`: Date of insertion;
- `state`: Integration event state, as text. Can be anything we'd like,
  but I'm thinking this will be something like:
  - Success ✅
  - Failed ❌
  - SuccessWithErrors ⚠️
- `state_details`: Expands on the previous column with more details, as
  text. Examples:
  - OK. Status code: 200
  - Status code: 429 - Rate limit reached
  - No access token provided
- `event`: The whole event object, stored as a JSON blob;
- `details`: JSON blob with details about the integration execution.
  Will depend on the integration itself, but for example:
  - Webhook: Request body
  - Slack App: Message text and an array with all the channels we're
    posting to

I think this gives us enough flexibility to cover all present and

(possibly) future integrations, but I'd like to hear your thoughts.

I'm also really torn on what to call this table:

- `integration_events`: Consistent with the feature name. Addons are now
  called integrations, so this would be consistent with the new thing;
- `addon_events`: Consistent with the existing `addons` table.

Our CSP reports that unsafe-inline is not recommended for styleSrc. This

PR adds a flag for making it possible to remove this element of our CSP

headers. It should allow us to see what (if anything) breaks hard.

We'll store hashes for the last 5 passwords, fetch them all for the user

wanting to change their password, and make sure the password does not

verify against any of the 5 stored hashes.
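A rough sketch of the reuse check, under stated assumptions: `isPasswordReused` and `VerifyFn` are hypothetical names, the hashes are assumed to arrive newest first, and the hash verification function is injected so the sketch does not depend on any particular hashing library.

```typescript
// Hypothetical signature for whatever verifies a password against a hash.
type VerifyFn = (password: string, hash: string) => Promise<boolean>;

// Number of previous passwords that may not be reused.
const PASSWORD_HISTORY_SIZE = 5;

// Returns true if the candidate password verifies against any of the most
// recent stored hashes. Assumes `previousHashes` is ordered newest first.
async function isPasswordReused(
    password: string,
    previousHashes: string[],
    verify: VerifyFn,
): Promise<boolean> {
    const recent = previousHashes.slice(0, PASSWORD_HISTORY_SIZE);
    for (const hash of recent) {
        if (await verify(password, hash)) return true;
    }
    return false;
}
```

Because salted hashes cannot be compared directly, each stored hash has to be verified against the candidate password individually, which is why the check loops instead of doing a single lookup.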

Includes some password-related UI/UX improvements and refactors. Also

some fixes related to reset password rate limiting (instead of an

unhandled exception), and token expiration on error.

---------

Co-authored-by: Nuno Góis <github@nunogois.com>