## About the changes

Some automation may keep some data up-to-date (e.g. segments). These

updates sometimes don't generate changes but we're still storing these

events in the event log and triggering reactions to those events.

Arguably, this could be done in each service domain logic, but it seems

to be a pretty straightforward solution: if preData and data are

provided, it means some change happened. Other events that don't have

preData or don't have data are treated as before.

Tests were added to validate we don't break other events.

When project is moved, then Unleash creates only one event, which is for

target project.

We also need one for source project, to know that project was moved out

of it.

Test will be in enterprise repo.

This fixes a problem where the yggdrasil-engine does not send the

correct value for bucket.start. In practice clients sends metrics every

60s and it does not matter if we use start or the stop timestamp to

resolve the nearest full hour.

Currently, in enterprise we're struggling with setting service and

transactionality; all our verfications says that the setting key is not

present, so setting-store happily tries to insert a new row with a new

PK. However, somehow at the same time, the key already exists. This

commit adds conflict handling to the insertNewRow.

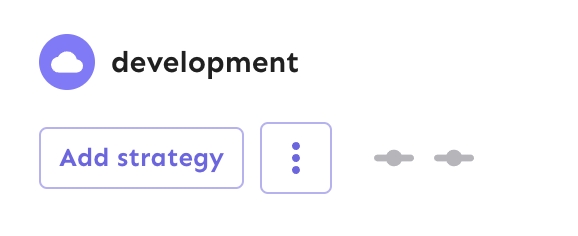

Fixes the height discrepancy between add strategy and more strategies

buttons, both with and without the flag enabled.

The essence of the fix is to make the "more strategies" button's height

dynamic and grow to match the height of the other button.

Before (flag enabled):

After (flag enabled):

Before (flag disabled):

After (flag disabled):

As a bonus: also enables the ui font redesign flag for server-dev.

If you're very sharp-eyed, you might notice a few things:

1. There's more padding on the new button. This was done in concert with

UX when we noticed there was more padding on other buttons. So as a

result, we set the button type to the default instead of "small".

1. The kebab button isn't perfectly square with the flag on. There's a

few issues here, but essentially: to use `aspect-ratio: 1`, you need

either a height or a width set. Because we want everything here to be

auto-generated (use the button's intrinsic height), I couldn't make it

work. In the end, I think this is close enough. If you have other ideas,

you're very welcome to try and fix it.

## About the changes

Based on the first hypothesis from

https://github.com/Unleash/unleash/pull/9264, I decided to find an

alternative way of initializing the DB, mainly trying to run migrations

only once and removing that from the actual test run.

I found in [Postgres template

databases](https://www.postgresql.org/docs/current/manage-ag-templatedbs.html)

an interesting option in combination with jest global initializer.

### Changes on how we use DBs for testing

Previously, we were relying on a single DB with multiple schemas to

isolate tests, but each schema was empty and required migrations or

custom DB initialization scripts.

With this method, we don't need to use different schema names

(apparently there's no templating for schemas), and we can use new

databases. We can also eliminate custom initialization code.

### Legacy tests

This method also highlighted some wrong assumptions in existing tests.

One example is the existence of `default` environment, that because of

being deprecated is no longer available, but because tests are creating

the expected db state manually, they were not updated to match the

existing db state.

To keep tests running green, I've added a configuration to use the

`legacy` test setup (24 tests). By migrating these, we'll speed up

tests, but the code of these tests has to be modified, so I leave this

for another PR.

## Downsides

1. The template db initialization happens at the beginning of any test,

so local development may suffer from slower unit tests. As a workaround

we could define an environment variable to disable the db migration

2. Proliferation of test dbs. In ephemeral environments, this is not a

problem, but for local development we should clean up from time to time.

There's the possibility of cleaning up test dbs using the db name as a

pattern:

2ed2e1c274/scripts/jest-setup.ts (L13-L18)

but I didn't want to add this code yet. Opinions?

## Benefits

1. It allows us migrate only once and still get the benefits of having a

well known state for tests.

3. It removes some of the custom setup for tests (which in some cases

ends up testing something not realistic)

4. It removes the need of testing migrations:

https://github.com/Unleash/unleash/blob/main/src/test/e2e/migrator.e2e.test.ts

as migrations are run at the start

5. Forces us to keep old tests up to date when we modify our database

Adds a killswitch called "filterExistingFlagNames". When enabled it will

filter out reported SDK metrics and remove all reported metrics for

names that does not match an exiting feature flag in Unleash.

This have proven critical in the rare case of an SDK that start sending

random flag-names back to unleash, and thus filling up the database. At

some point the database will start slowing down due to the noisy data.

In order to not resolve the flagNames all the time we have added a small

cache (10s) for feature flag names. This gives a small delay (10s) from

flag is created until we start allow metrics for the flag when

kill-switch is enabled. We should probably listen to the event-stream

and use that invalidate the cache when a flag is created.

## About the changes

Validations in the constructor were executed on the way out (i.e. when

reading users). Instead we should validate when we insert the users.

We're also relaxing the email validation to support top domain emails

(e.g. `...@jp`)

This is implementing the segments events for delta API. Previous version

of delta API, we were just sending all of the segments. Now we will have

`segment-updated` and `segment-removed `events coming to SDK.

This PR sets up the application to accept a value from a variant we

control to set the font size of the application on a global level. If it

fails, the value falls back to the previously set CSS value.

Add new methods to the store behind data usage metrics that accept date

ranges instead of a single month. The old data collection methods

re-route to the new ones instead, so the new methods are tested

implicitly.

Also deprecates the new endpoint that's not in use anywhere except in an

unused service method in Enterprise yet.

## Discussion point:

Accepts from and to params as dates for type safety. You can send unparseable strings, but if you send a date object, you know it'll work.

Leaves the use of the old method in `src/lib/features/instance-stats/instance-stats-service.ts` to keep changes small.

We are changing how the Delta API works, as discussed:

1. We have removed the `updated` and `removed` arrays and now keep

everything in the `events` array.

2. We decided to keep the hydration cache separate from the events array

internally. Since the hydration cache has a special structure and may

contain not just one feature but potentially 1,000 features, it behaved

differently, requiring a lot of special logic to handle it.

3. Implemented `nameprefix` filtering, which we were missing before.

Things still to implement:

1. Segment hydration and updates to it.

Add support for querying the traffic data usage store for the aggregated data for an arbitrary number of months back.

Adds a new `getTrafficDataForMonthRange(monthsBack: number)` method to the store that aggregates data on a monthly basis by status code and traffic group. Returns a new type with month data instead of day data.

This PR implements a first version of the new month/range picker for the

data usage graphs. It's minimally hooked up to the existing

functionality to not take anything away.

This primary purpose of this PR is to get the design and interaction out

on sandbox so that UX can have a look and we can make adjustments.

As such, there are a few things in the code that we'll want to clean up

before removing the flag later:

- for faster iteration, I've used a lot of CSS nesting and element

selectors. this isn't usually how we do it here, so we'll probably want

to extract into styled components later

- there is a temporary override of the value in the period selector so

that you can select ranges. It won't affect the chart state, but it

affects the selector state. Again, this lets you see how it acts and

works.

- I've added a `NewHeader` component because the existing setup smushed

the selector (it's a MUI grid setup, which isn't very flexible). I don't

know what we want to do with this in the end, but the existing chart

*does* have some problems when you resize your window, at least

(although this is likely due to the chart, and can be solved in the same

way that we did for the personal dashboards).

Currently, every time you archived feature, it created

feature-dependencies-removed event.

This PR adds a check to only create events for those features that have

dependency.

We got an event for a scheduled application success today that looked a

little something like this:

> Successfully applied the scheduled change request #1168 in the

production environment in project eg by gaston in project eg.

Notice that we're stating the project twice (once with a link (removed

here) and once without).

This PR removes the redundancy in CR events:

The project is already included in the `changeRequest` variable, which

is populated in `src/lib/addons/feature-event-formatter-md.ts` by

the `generateChangeRequestLink` function.

The (current) definition is:

```typescript

generateChangeRequestLink(event: IEvent): string | undefined {

const { preData, data, project, environment } = event;

const changeRequestId =

data?.changeRequestId || preData?.changeRequestId;

if (project && changeRequestId) {

const url = `${this.unleashUrl}/projects/${project}/change-requests/${changeRequestId}`;

const text = `#${changeRequestId}`;

const featureLink = this.generateFeatureLink(event);

const featureText = featureLink

? ` for feature flag ${this.bold(featureLink)}`

: '';

const environmentText = environment

? ` in the ${this.bold(environment)} environment`

: '';

const projectLink = this.generateProjectLink(event);

const projectText = project

? ` in project ${this.bold(projectLink)}`

: '';

if (this.linkStyle === LinkStyle.SLACK) {

return `${this.bold(`<${url}|${text}>`)}${featureText}${environmentText}${projectText}`;

} else {

return `${this.bold(`[${text}](${url})`)}${featureText}${environmentText}${projectText}`;

}

}

}

```

Which includes links, env, and project info already.

Weird thing is that I could not reproduce it locally, but I have a

theory to fix our delta mismatch.

Revisions are added to the delta every second.

This means that in a single revision, you can disable an event, remove a

dependency, and archive it simultaneously. These actions are usually

performed together since archiving an event will inherently disable it,

remove its dependencies, and so on.

Currently, we observe these events happening within the same revision.

However, since we were checking `.updated` last, the event was always

removed from the `removedMap`.

Now, by checking `.removed` last, the archive action will properly

propagate to the revision.

Our delta API was returning archived feature as updated. Now making sure

we do not put `archived-feature `event into `updated` event array.

Also stop returning removed as complex object.

## About the changes

Moved Open API validation handler to the controller layer to reuse on

all services such as project and segments, and also removed unnecessary

middleware at the top level, `app.ts`, and method, `useErrorHandler` in

`openapi-service.ts`.

### Important files

#### Before

<img width="1510" alt="1 Before"

src="https://github.com/user-attachments/assets/96ac245d-92ac-469e-a097-c6c0b78d0def">

Express cant' parse the path parameter because it doesn't be specified

on the `use` method. Therefore, it returns `undefined` as an error

message.

#### After

<img width="1510" alt="2 After"

src="https://github.com/user-attachments/assets/501dae6c-fef5-4e77-94c3-128a9f7210da">

Express can parse the path parameter because I change to specify it on

the controller layer. Accordingly, it returns `test`.

Trying again, now with a tested function for resolvingIsOss.

Still want to test this on a pro instance in sandbox before we deploy

this to our customers to avoid what happened Friday.

---------

Co-authored-by: Gastón Fournier <gaston@getunleash.io>

When there is new revision, we will start storing memory footprint for

old client-api and the new delta-api.

We will be sending it as prometheus metrics.

The memory size will only be recalculated if revision changes, which

does not happen very often.

## About the changes

According to some logs, sdks can be undefined:

```

TypeError: Cannot read properties of null (reading 'sort')\n at /unleash/node_modules/unleash-server/dist/lib/db/client-applications-store.js:330:22\n

```

This is still raw and experimental.

We started to pull deleted features from event payload.

Now we put full query towards read model.

Co-Author: @FredrikOseberg

This PR refactors the method that listens on revision changes:

- Now supports all environments

- Removed unnecessary populate cache method

# Discussion point

In the listen method, should we implement logic to look into which

environments the events touched? By doing this we would:

- Reduce cache size

- Save some memory/CPU if the environment is not initialized in the

cache, because we could skip the DB calls.

This is based on the exising client feature toggle store, but some

alterations.

1. We support all of the querying it did before.

2. Added support to filter by **featureNames**

3. Simplified logic, so we do not have admin API logic

- no return of tags

- no return of last seen

- no return of favorites

- no playground logic

Next PR will try to include the revision ID.

This is not changing existing logic.

We are creating a new endpoint, which is guarded behind a flag.

---------

Co-authored-by: Simon Hornby <liquidwicked64@gmail.com>

Co-authored-by: FredrikOseberg <fredrik.no@gmail.com>

Added more tests around specific plans. Also added snapshot as per our

conversation @gastonfournier, but I'm unsure how much value it will give

because it seems that the tests should already catch this using

respondWithValidation and the OpenAPI schema. The problem here is that

empty array is a valid state, so there were no reason for the schema to

break the tests.

From 13 seconds to 0.1 seconds.

1. Joining 1 million events to projects/features is slow. **Solved by

using CTE.**

2. Running grouping on 1 million rows is slow. **Solved by adding

index.**

We need this PR to correctly set up CORS for streaming-related endpoints

in our spike and add the flag to our types.

---------

Co-authored-by: kwasniew <kwasniewski.mateusz@gmail.com>

This PR removes all references to the `featuresExportImport` flag.

The flag was introduced in [PR

#3411](https://github.com/Unleash/unleash/pull/3411) on March 29th 2023,

and the flag was archived on April 3rd. The flag has always defaulted to

true.

We've looked at the project that introduced the flag and have spoken to CS about it: we can find no reason to keep the flag around. So well remove it now.

<!-- Thanks for creating a PR! To make it easier for reviewers and

everyone else to understand what your changes relate to, please add some

relevant content to the headings below. Feel free to ignore or delete

sections that you don't think are relevant. Thank you! ❤️ -->

## About the changes

<!-- Describe the changes introduced. What are they and why are they

being introduced? Feel free to also add screenshots or steps to view the

changes if they're visual. -->

### Summary

- Add `PROJECT_ARCHIVED` event on `EVENT_MAP` to use

- Add a test case for `PROJECT_ARCHIVED` event formatting

- Add `PROJECT_ARCHIVED` event when users choose which events they send

to Slack

- Fix Slack integration document by adding `PROJECT_ARCHIVED`

### Example

The example message looks like the image below. I covered my email with

a black rectangle to protect my privacy 😄

The link refers `/projects-archive` to see archived projects.

<img width="529" alt="Slack message example"

src="https://github.com/user-attachments/assets/938c639f-f04a-49af-9b4a-4632cdea9ca7">

## Discussion points

<!-- Anything about the PR you'd like to discuss before it gets merged?

Got any questions or doubts? -->

I considered the reason why Unleash didn't implement to send

`PROJECT_ARCHIVED` message to Slack integration.

One thing I assumed that it is impossible to create a new project with

open source codes, which means it is only enabled in the enterprise

plan. However,

[document](https://docs.getunleash.io/reference/integrations/slack-app#events)

explains that users can send `PROJECT_CREATED` and `PROJECT_DELETED`

events to Slack, which are also available only in the enterprise plan,

hence it means we need to embrace all worthwhile events.

I think it is reasonable to add `PROJECT_ARCHIVED` event to Slack

integration because users, especially operators, need to track them

through Slack by separating steps, `_CREATED`, `_ARCHIVED`, and

`_DELETED`.

We want to prevent our users from defining multiple templates with the

same name. So this adds a unique index on the name column when

discriminator is template.

This PR fixes three things that were wrong with the lifecycle summary

count query:

1. When counting the number of flags in each stage, it does not take

into account whether a flag has moved out of that stage. So if you have

a flag that's gone through initial -> pre-live -> live, it'll be counted

for each one of those steps, not just the last one.

2. Some flags that have been archived don't have the corresponding

archived state row in the db. This causes them to count towards their

other recorded lifecycle stages, even when they shouldn't. This is

related to the previous one, but slightly different. Cross-reference the

features table's archived_at to make sure it hasn't been archived

3. The archived number should probably be all flags ever archived in the

project, regardless of whether they were archived before or after

feature lifecycles. So we should check the feature table's archived_at

flag for the count there instead

This PR:

- conditionally deprecates the project health report endpoint. We only

use this for technical debt dashboard that we're removing. Now it's

deprecated once you turn the simplifiy flag on.

- extracts the calculate project health function into the project health

functions file in the appropriate domain folder. That same function is

now shared by the project health service and the project status service.

For the last point, it's a little outside of how we normally do things,

because it takes its stores as arguments, but it slots in well in that

file. An option would be to make a project health read model and then

wire that up in a couple places. It's more code, but probably closer to

how we do things in general. That said, I wanted to suggest this because

it's quick and easy (why do much work when little work do trick?).

This PR updates the project status service (and schemas and UI) to use

the project's current health instead of the 4-week average.

I nabbed the `calculateHealthRating` from

`src/lib/services/project-health-service.ts` instead of relying on the

service itself, because that service relies on the project service,

which relies on pretty much everything in the entire system.

However, I think we can split the health service into a service that

*does* need the project service (which is used for 1 of 3 methods) and a

service (or read model) that doesn't. We could then rely on the second

one for this service without too much overhead. Or we could extract the

`calculateHealthRating` into a shared function that takes its stores as

arguments. ... but I suggest doing that in a follow-up PR.

Because the calculation has been tested other places (especially if we

rely on a service / shared function for it), I've simplified the tests

to just verify that it's present.

I've changed the schema's `averageHealth` into an object in case we want

to include average health etc. in the future, but this is up for debate.

This PR fixes the counting of unhealthy flags for the project status

page. The issue was that we were looking for `archived = false`, but we

don't set that flag in the db anymore. Instead, we set the `archived_at`

date, which should be null if the flag is unarchived.

**This migration introduces a query that calculates the licensed user

counts and inserts them into the licensed_users table.**

**The logic ensures that:**

1. All users created up to a specific date are included as active users

until they are explicitly deleted.

2. Deleted users are excluded after their deletion date, except when

their deletion date falls within the last 30 days or before their

creation date.

3. The migration avoids duplicating data by ensuring records are only

inserted if they don’t already exist in the licensed_users table.

**Logic Breakdown:**

**Identify User Events (user_events):** Extracts email addresses from

user-related events (user-created and user-deleted) and tracks the type

and timestamp of the event. This step ensures the ability to

differentiate between user creation and deletion activities.

**Generate a Date Range (dates):** Creates a continuous range of dates

spanning from the earliest recorded event up to the current date. This

ensures we analyze every date, even those without events.

**Determine Active Users (active_emails):** Links dates with user events

to calculate the status of each email address (active or deleted) on a

given day. This step handles:

- The user's creation date.

- The user's deletion date (if applicable).

**Calculate Daily Active User Counts (result):**

For each date, counts the distinct email addresses that are active based

on the conditions:

- The user has no deletion date.

- The user's deletion date is within the last 30 days relative to the

current date.

- The user's creation date is before the deletion date.

This PR adds the option to select potentially stale flags from the UI.

It also updates the name we use for parsing from the API: instead of

`potentiallyStale` we use `potentially-stale`. This follows the

precedent set by "kill switch" (which we send as 'kill-switch'), the

only other multi-word option that I could find in our filters.

This PR adds support for the `potentiallyStale` value in the feature

search API. The value is added as a third option for `state` (in

addition to `stale` and `active`). Potentially stale is a subset of

active flags, so stale flags are never considered potentially stale,

even if they have the flag set in the db.

Because potentially stale is a separate column in the db, this

complicates the query a bit. As such, I've created a specialized

handling function in the feature search store: if the query doesn't

include `potentiallyStale`, handle it as we did before (the mapping has

just been moved). If the query *does* contain potentially stale, though,

the handling is quite a bit more involved because we need to check

multiple different columns against each other.

In essence, it's based on this logic:

when you’re searching for potentially stale flags, you should only get flags that are active and marked as potentially stale. You should not get stale flags.

This can cause some confusion, because in the db, we don’t clear the potentially stale status when we mark a flag as stale, so we can get flags that are both stale and potentially stale.

However, as a user, if you’re looking for potentially stale flags, I’d be surprised to also get (only some) stale flags, because if a flag is stale, it’s definitely stale, not potentially stale.

This leads us to these six different outcomes we need to handle when your search includes potentially stale and stale or active:

1. You filter for “potentially stale” flags only. The API will give you only flags that are active and marked as potentially stale. You will not get stale flags.

2. You filter only for flags that are not potentially stale. You will get all flags that are active and not potentially stale and all stale flags.

3. You search for “is any of stale, potentially stale”. This is our “unhealthy flags” metric. You get all stale flags and all flags that are active and potentially stale

4. You search for “is none of stale, potentially stale”: This gives you all flags that are active and not potentially stale. Healthy flags, if you will.

5. “is any of active, potentially stale”: you get all active flags. Because we treat potentially stale as a subset of active, this is the same as “is active”

6. “is none of active, potentially stale”: you get all stale flags. As in the previous point, this is the same as “is not active”

This change adds a db migration to make the potentially_stale column

non-nullable. It'll set any NULL values to `false`.

In the down-migration, make the column nullable again.

## About the changes

- Remove `idNumberMiddleware` method and change to use `parameters`

field in `openApiService.validPath` method for the flexibility.

- Remove unnecessary `Number` type converting method and change them to

use `<{id: number}>` to specify the type.

### Reference

The changed response looks like the one below.

```JSON

{

"id":"8174a692-7427-4d35-b7b9-6543b9d3db6e",

"name":"BadDataError",

"message":"Request validation failed: your request body or params contain invalid data. Refer to the `details` list for more information.",

"details":[

{

"message":"The `/params/id` property must be integer. You sent undefined.",

"path":"/params/id"

}

]

}

```

I think it might be better to customize the error response, especially

`"You sent undefined."`, on another pull request if this one is

accepted. I prefer to separate jobs to divide the context and believe

that it helps reviewer easier to understand.

Remove everything related to the connected environment count for project

status. We decided that because we don't have anywhere to link it to at

the moment, we don't want to show it yet.

## About the changes

Builds on top of #8766 to use memoized results from stats-service.

Because stats service depends on version service, and to avoid making

the version service depend on stats service creating a cyclic

dependency. I've introduced a telemetry data provider. It's not clean

code, but it does the job.

After validating this works as expected I'll clean up

Added an e2e test validating that the replacement is correct:

[8475492](847549234c)

and it did:

https://github.com/Unleash/unleash/actions/runs/11861854341/job/33060032638?pr=8776#step:9:294

Finally, cleaning up version service

## About the changes

Our stats are used for many places and many times to publish prometheus

metrics and some other things.

Some of these queries are heavy, traversing all tables to calculate

aggregates.

This adds a feature flag to be able to memoize 1 minute (by default) how

long to keep the calculated values in memory.

We can use the key of the function to individually control which ones

are memoized or not and for how long using a numeric variant.

Initially, this will be disabled and we'll test in our instances first

This PR adds stale flag count to the project status payload. This is

useful for the project status page to show the number of stale flags in

the project.

Adding email_hash column to users table.

We will update all existing users to have hashed email.

All new users will also get the hash.

We are fine to use md5, because we just need uniqueness. We have emails

in events table stored anyways, so it is not sensitive.

This PR moves the project lifecycle summary to its own subdirectory and

adds files for types (interface) and a fake implementation.

It also adds a query for archived flags within the last 30 days taken

from `getStatusUpdates` in `src/lib/features/project/project-service.ts`

and maps the gathered data onto the expected structure. The expected

types have also been adjusted to account for no data.

Next step will be hooking it up to the project status service, adding

schema, and exposing it in the controller.

This PR adds more tests to check a few more cases for the lifecycle

calculation query. Specifically, it tests that:

- If we don't have any data for a stage, we return `null`.

- We filter on projects

- It correctly takes `0` days into account when calculating averages.

This PR adds a project lifecycle read model file along with the most

important (and most complicated) query that runs with it: calculating

the average time spent in each stage.

The calculation relies on the following:

- when calculating the average of a stage, only flags who have gone into

a following stage are taken into account.

- we'll count "next stage" as the next row for the same feature where

the `created_at` timestamp is higher than the current row

- if you skip a stage (go straight to live or archived, for instance),

that doesn't matter, because we don't look at that.

The UI only shows the time spent in days, so I decided to go with

rounding to days directly in the query.

## Discussion point:

This one uses a subquery, but I'm not sure it's possible to do without

it. However, if it's too expensive, we can probably also cache the value

somehow, so it's not calculated more than every so often.

This change introduces a new method `countProjectTokens` on the

`IApiTokenStore` interface. It also swaps out the manual filtering for

api tokens belonging to a project in the project status service.

Do not count stale flags as potentially stale flags to remove

duplicates.

Stale flags feel like more superior state and it should not show up

under potentially stale.

This PR adds member, api token, and segment counts to the project status

payload. It updates the schemas and adds the necessary stores to get

this information. It also adds a new query to the segments store for

getting project segments.

I'll add tests in a follow-up.

As part of the release plan template work. This PR adds the three events

for actions with the templates.

Actually activating milestones should probably trigger existing

FeatureStrategyAdd events when adding and FeatureStrategyRemove when

changing milestones.

This PR wires up the connectedenvironments data from the API to the

resources widget.

Additionally, it adjusts the orval schema to add the new

connectedEnvironments property, and adds a loading state indicator for

the resource values based on the project status endpoint response.

As was discussed in a previous PR, I think this is a good time to update

the API to include all the information required for this view. This

would get rid of three hooks, lots of loading state indicators (because

we **can** do them individually; check out

0a334f9892)

and generally simplify this component a bit.

Here's the loading state:

In some cases, people want to disable database migration. For example,

some people or companies want to grant whole permissions to handle the

schema by DBAs, not by application level hence I use

`parseEnvVarBoolean` to handle `disableMigration` option by environment

variable. I set the default value as `false` for the backward

compatibility.

This PR adds connected environments to the project status payload.

It's done by:

- adding a new `getConnectedEnvironmentCountForProject` method to the

project store (I opted for this approach instead of creating a new view

model because it already has a `getEnvironmentsForProject` method)

- adding the project store to the project status service

- updating the schema

For the schema, I opted for adding a `resources` property, under which I

put `connectedEnvironments`. My thinking was that if we want to add the

rest of the project resources (that go in the resources widget), it'd

make sense to group those together inside an object. However, I'd also

be happy to place the property on the top level. If you have opinions

one way or the other, let me know.

As for the count, we're currently only counting environments that have

metrics and that are active for the current project.

This was an oversight. The test would always fail after 2024-11-04,

because yesterday is no longer 2024-11-03. This way, we're using the

same string in both places.

Adding project status schema definition, controller, service, e2e test.

Next PR will add functionality for activity object.

---------

Co-authored-by: Thomas Heartman <thomas@getunleash.io>

The two lints being turned off are new for 1.9.x and caused a massive

diff inside frontend if activated. To reduce impact, these were turned off for

the merge. We might want to look at turning them back on once we're

ready to have a semantic / a11y refactor of our frontend.