Path types in our openapi are inferred as string (which is a sensible

default). But we can be more specific and provide the right type for

each parameter. This is one example of how we can do that.

This PR fixes an issue where the number of flags belonging to a project

was wrong in the new getProjectsForAdminUi.

The cause was that we now join with the events table to get the most recent

"lastUpdatedAt" data. This meant that we got multiple rows for each

flag, so we counted the same flag multiple times. The fix was to use a

"distinct".
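To illustrate the double counting, here is a minimal in-memory sketch. It is not the actual query: the `JoinedRow` shape and both helper names are made up for the example.

```typescript
// Hypothetical shape of one row after joining flags with their events.
type JoinedRow = { project: string; flagName: string; eventId: number };

// Naive count: every joined row counts, so a flag with N events counts N times.
function countFlagsNaive(rows: JoinedRow[], project: string): number {
    return rows.filter((row) => row.project === project).length;
}

// Distinct count: each flag name counts once, however many events it has.
function countFlagsDistinct(rows: JoinedRow[], project: string): number {
    const names = new Set(
        rows
            .filter((row) => row.project === project)
            .map((row) => row.flagName),
    );
    return names.size;
}

// 'flag-a' has two events, so the join yields two rows for it.
const rows: JoinedRow[] = [
    { project: 'default', flagName: 'flag-a', eventId: 1 },
    { project: 'default', flagName: 'flag-a', eventId: 2 },
    { project: 'default', flagName: 'flag-b', eventId: 3 },
];
```

With these rows, the naive count sees three rows while the distinct count sees two flags.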

Additionally, I used this as an excuse to write some more tests that I'd

been thinking about. And in doing so also uncovered another bug that

would only ever surface in verrry rare conditions: if a flag had been

created in project A, but moved to project B AND the

feature-project-change event hadn't fired correctly, project B's last

updated could show data from that feature in project A.

I've also taken the liberty of doing a little bit of cleanup.

## About the changes

When storing last seen metrics we no longer validate at insert time that

the feature exists. Instead, there's a job cleaning up on a regular

interval.

Metrics for features with names longer than 255 characters make the whole

batch fail, resulting in metrics being lost.

This PR helps mitigate the issue and also logs the long feature names.
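A rough sketch of the mitigation, assuming the batch is filtered before insert. The `LastSeenMetric` shape and the `partitionByNameLength` helper are hypothetical; only the 255-character figure comes from the description above.

```typescript
// Assumed limit, taken from the 255-character figure mentioned above.
const MAX_FEATURE_NAME_LENGTH = 255;

// Hypothetical, trimmed-down metric row.
type LastSeenMetric = { featureName: string; environment: string };

// Splits a batch into rows that are safe to insert and rows to skip,
// so that one overlong name can no longer fail the whole batch.
function partitionByNameLength(batch: LastSeenMetric[]): {
    valid: LastSeenMetric[];
    tooLong: LastSeenMetric[];
} {
    const valid: LastSeenMetric[] = [];
    const tooLong: LastSeenMetric[] = [];
    for (const metric of batch) {
        if (metric.featureName.length > MAX_FEATURE_NAME_LENGTH) {
            tooLong.push(metric);
        } else {
            valid.push(metric);
        }
    }
    return { valid, tooLong };
}
```

The caller would insert `valid` and log the names in `tooLong`.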

Implements empty responses for the fake project read model. Instead of

throwing a not implemented error, we'll return empty results.

This makes some of the tests in enterprise pass.

This PR touches up a few small things in the project read model.

Fixes:

Use the right method name in the query/method timer for

`getProjectsForAdminUi`. I'd forgotten to change the timer name from the

original method name.

Spells the method name correctly for the `getMembersCount` timer (it

used to be `getMemberCount`, but the method is called `getMembersCount`

with a plural s).

Changes:

Call the `getMembersCount` timer from within the `getMembersCount`

method itself. Instead of setting that timer up from two different

places, we can call it in the method we're timing. This wasn't a problem

previously, because the method was only called from a single place.

Assuming we always wanna time that query, it makes more sense to put the

timing in the actual method.
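The pattern looks roughly like this. It is a sketch, not the real code: `StartTimer` and `createGetMembersCount` are hypothetical names, and the query is synchronous here only to keep the example short.

```typescript
// Hypothetical timer: starting it returns a callback that stops it.
type StartTimer = (action: string) => () => void;

// Builds a `getMembersCount` that times itself, so callers don't have to.
// `runQuery` stands in for the real database call.
function createGetMembersCount(
    startTimer: StartTimer,
    runQuery: () => number,
): () => number {
    return function getMembersCount(): number {
        const stopTimer = startTimer('getMembersCount');
        try {
            return runQuery();
        } finally {
            // Stop the timer whether the query succeeded or threw.
            stopTimer();
        }
    };
}
```

Every call site now gets timed under the same name, without having to set up the timer itself.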

Hooks up the new project read model and updates the existing project

service to use it instead when the flag is on.

In doing so, it:

- creates a composition root for the read model

- includes it in IUnleashStores

- updates some existing methods to accept either the old or the new

model

- updates the OpenAPI schema to deprecate the old properties

These are both related to the work on the project list improvements

project.

The `projectListImprovements` flag will be used to enable/disable the

new project list improvements.

The `useProjectReadModel` flag will be used to enable/disable the use

of the new project read model and is mostly a safety feature.

Creates a new project read model exposing data to be used for the UI and

for the insights module.

The model contains two public methods, both based on the project store's

`getProjectsWithCounts`:

- `getProjectsForAdminUi`

- `getProjectsForInsights`

This mirrors the two places where the base query is actually in use

today and adapts the query to those two explicit cases.

The new `getProjectsForAdminUi` method also contains data for last flag

update and last flag metric reported, as required for the new projects

list screen.

Additionally the read model contains a private `getMembersCount` method,

which is also lifted from the project store. This method was only used

in the old `getProjectsWithCounts` method, so I have also removed the

method from the public interface.

This PR does *not* hook up the new read model to anything or delete any

existing uses of the old method.

## Why?

As mentioned in the background, this query is used in two places, both

to get data for the UI (directly or indirectly). This is consistent with

the principles laid out in our [ADR on read vs write

models](https://docs.getunleash.io/contributing/ADRs/back-end/write-model-vs-read-models).

There is an argument to be made, however, that the insights module uses

this as an **internal** read model, but the description of an internal

model ("Internal read models are typically narrowly focused on answering

one question and usually require simple queries compared to external

read models") does not apply here. It's closer to the description of

external read models: "View model will typically join data across a few

DB tables" for display in the UI.

## Discussion points

### What about properties on the schema that are now gone?

The `project-schema`, which is delivered to the UI through the

`getProjects` endpoint (and nowhere else, it seems), describes

properties that will no longer be sent to the front end, including

`defaultStickiness`, `avgTimeToProduction`, and more. Can we just stop

sending them or is that a breaking change?

The schema does not define them as required properties, so in theory,

not sending them isn't breaking any contracts. We can deprecate the

properties and just not populate them anymore.

At least that's my thought on it. I'm open to hearing other views.

### Can we add the properties in fewer lines of code?

Yes! The [first commit in this PR

(b7534bfa)](b7534bfa07)

adds the two new properties in 8 lines of code.

However, this comes at the cost of diluting the `getProjectsWithCounts`

method further by adding more properties that are not used by the

insights module. That said, that might be a worthwhile tradeoff.

## Background

_(More [details in internal slack

thread](https://unleash-internal.slack.com/archives/C046LV6HH6W/p1723716675436829))_

I noticed that the project store's `getProjectsWithCounts` is used in

exactly two places:

1. In the project service method which maps directly to the project

controller (in both OSS and enterprise).

2. In the insights service in enterprise.

In the case of the controller, that’s the termination point. I’d guess

that when written, the store only served the purpose of showing data to

the UI.

In the event of the insights service, the data is mapped in

getProjectStats.

But I was a little surprised that they were sharing the same query, so I

decided to dig a little deeper to see what we’re actually using and what

we’re not (including the potential new columns). Here’s what I found.

Of the 14 already existing properties, insights use only 7 and the

project list UI uses only 10 (though the schema mentions all 14 (as far

as I could tell from scouring the code base)). Additionally, there’s two

properties that I couldn’t find any evidence of being used by either:

- default stickiness

- updatedAt (this is when the project was last updated; not its flags)

While adding privateProjectsChecker, I noticed that the events composition

root is hardly used at all.

Refactored code so we do not call new EventService anymore.

## About the changes

When reading feature env strategies that have no segments, we now return an

empty list of segments. Previously, it was undefined, leading to

overly verbose change request diffs.
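In essence, the change amounts to a default like the one below. This is a sketch: the `StrategyRow` type and `withSegments` helper are hypothetical and much smaller than the real strategy objects.

```typescript
// Hypothetical, trimmed-down strategy row; real strategies have more fields.
type StrategyRow = { id: string; segments?: number[] };

// Always returns a strategy with a `segments` array, defaulting to empty,
// so "no segments" looks the same before and after a change request.
function withSegments(row: StrategyRow): { id: string; segments: number[] } {
    return { ...row, segments: row.segments ?? [] };
}
```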

<img width="669" alt="Screenshot 2024-08-14 at 16 06 14"

src="https://github.com/user-attachments/assets/1ac6121b-1d6c-48c6-b4ce-3f26c53c6694">

https://linear.app/unleash/issue/2-2518/figure-out-how-to-create-the-initial-admin-user-in-unleash

The logic around `initAdminUser` that was introduced in

https://github.com/Unleash/unleash/pull/4927 confused me a bit. I wrote

new tests with what I assume are our expectations for this feature and

refactored the code accordingly, but would like someone to confirm that

it makes sense to them as well.

The logic was split into 2 different methods: one to get the initial

invite link, and another to send a welcome email. Now these two methods

are more granular than the previous alternative and can be used

independently of creating a new user.

---------

Co-authored-by: Gastón Fournier <gaston@getunleash.io>

For easy Gitar integration, we need to have a boolean in the event

payload.

We might rethink it when we add variants, but currently enabled with

variants is not used.

Changes the event search handling, so that searching by user uses the

user's ID, not the "createdBy" name in the event. This aligns better

with how the OpenAPI schema describes it.

Encountered this case after encrypting an already long email address.

This should mitigate the issue in demo instance. I don't think it's a

big issue to ignore the length when validating an email address because

this is already limited at the DB layer by the column length.

Adds an endpoint to return all event creators.

An interesting point is that it does not return the user object, but

just created_by as a string. This is because we do not store user IDs

for events, as they are not strictly bound to a user object, but rather

a historical user with the name X.

Previously people were able to send random data to feature type. Now it

is validated.

Fixes https://github.com/Unleash/unleash/issues/7751

---------

Co-authored-by: Thomas Heartman <thomas@getunleash.io>

Changed the url of event search to search/events to align with

search/features. With that created a search controller to keep all

searches under there.

Added first test.

This PR adds Grafana gauges for all the existing resource limits. The

primary purpose is to be able to use this in alerting. Secondarily, we

can also use it to get better insights into how many customers have

increased their limits, as well as how many people are approaching their

limit, regardless of whether it's been increased or not.

## Discussion points

### Implementation

The first approach I took (in

87528b4c67),

was to add a new gauge for each resource limit. However, there's a lot

of boilerplate for it.

I thought doing it like this (the current implementation) would make it

easier. We should still be able to use the labelName to collate this in

Grafana, as far as I understand? As a bonus, we'd automatically get new

resource limits when we add them to the schema.

``` tsx
const resourceLimit = createGauge({
    name: 'resource_limit',
    help: 'The maximum number of resources allowed.',
    labelNames: ['resource'],
});

// ...

for (const [resource, limit] of Object.entries(config.resourceLimits)) {
    resourceLimit.labels({ resource }).set(limit);
}
```

That way, when checking the stats, we should be able to do something

like this:

``` promql
resource_limit{resource="constraintValues"}
```

### Do we need to reset gauges?

I noticed that we reset gauges before setting values in them all over

the place. I don't know if that's necessary. I'd like to get that

clarified before merging this.

https://linear.app/unleash/issue/2-2501/adapt-origin-middleware-to-stop-logging-ui-requests-and-start

This adapts the new origin middleware to stop logging UI requests (too

noisy) and adds new Prometheus metrics.

<img width="745" alt="image"

src="https://github.com/user-attachments/assets/d0c7f51d-feb6-4ff5-b856-77661be3b5a9">

This should allow us to better analyze this data. If we see a lot of API

requests, we can dive into the logs for that instance and check the

logged data, like the user agent.

This PR adds some helper methods to make listening and emitting metric

events more strict in terms of types. I think it's a positive change

aligned with our scouting principle, but if you think it's complex and

does not belong here I'm happy with dropping it.
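For reviewers who want a feel for the idea, here is a minimal sketch of typed emit/listen helpers. The event names, payloads, and helper names are all illustrative, not the ones in this PR.

```typescript
// Hypothetical event map; names and payloads are illustrative only.
type MetricEvents = {
    'client-metrics': { appName: string; count: number };
    'client-register': { appName: string; sdkVersion: string };
};

// Minimal structural type covering the emitter methods used here.
interface EmitterLike {
    on(event: string, listener: (payload: any) => void): unknown;
    emit(event: string, payload: unknown): unknown;
}

// Listening: the listener's payload type follows from the event name.
function onMetricEvent<K extends keyof MetricEvents>(
    emitter: EmitterLike,
    event: K,
    listener: (payload: MetricEvents[K]) => void,
): void {
    emitter.on(event, listener);
}

// Emitting: the compiler rejects payloads that don't match the event name.
function emitMetricEvent<K extends keyof MetricEvents>(
    emitter: EmitterLike,
    event: K,
    payload: MetricEvents[K],
): void {
    emitter.emit(event, payload);
}
```

The underlying emitter stays untyped; the helpers only narrow what call sites are allowed to pass in.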

Add ability to format events as Markdown in generic webhooks,

similar to Datadog integration.

Closes https://github.com/Unleash/unleash/issues/7646

Co-authored-by: Nuno Góis <github@nunogois.com>

https://linear.app/unleash/issue/2-2469/keep-the-latest-event-for-each-integration-configuration

This makes it so we keep the latest event for each integration

configuration, along with the previous logic of keeping the latest 100

events of the last 2 hours.
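As I read it, the retention rule can be sketched as below. This is an in-memory illustration, not the actual cleanup query; the `IntegrationEvent` shape and `idsToKeep` are hypothetical, and the interpretation of "latest 100 events of the last 2 hours" is an assumption.

```typescript
// Hypothetical, trimmed-down event row; `createdAt` is a Unix time in ms.
type IntegrationEvent = { id: number; integrationId: number; createdAt: number };

const TWO_HOURS_MS = 2 * 60 * 60 * 1000;
const MAX_RECENT = 100;

// Returns the ids of events to keep; everything else may be deleted.
function idsToKeep(events: IntegrationEvent[], now: number): Set<number> {
    const newestFirst = [...events].sort((a, b) => b.createdAt - a.createdAt);
    const keep = new Set<number>();

    // Previous logic (as assumed here): latest 100 events from the last 2 hours.
    newestFirst
        .filter((event) => now - event.createdAt <= TWO_HOURS_MS)
        .slice(0, MAX_RECENT)
        .forEach((event) => keep.add(event.id));

    // New logic: always keep the latest event per integration configuration.
    const seenIntegrations = new Set<number>();
    for (const event of newestFirst) {
        if (!seenIntegrations.has(event.integrationId)) {
            seenIntegrations.add(event.integrationId);
            keep.add(event.id);
        }
    }
    return keep;
}
```

An integration that has been quiet for days therefore still keeps its most recent event.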

This should be a cheap nice-to-have, since now we can always know what

the latest integration event looked like for each integration

configuration. This will tie-in nicely with the next task of making the

latest integration event state visible in the integration card.

Also improved the clarity of the auto-deletion explanation in the modal.

This PR adds the UI part of feature flag collaborators. Collaborators are hidden on windows smaller than size XL because we're not sure how to deal with them in those cases yet.

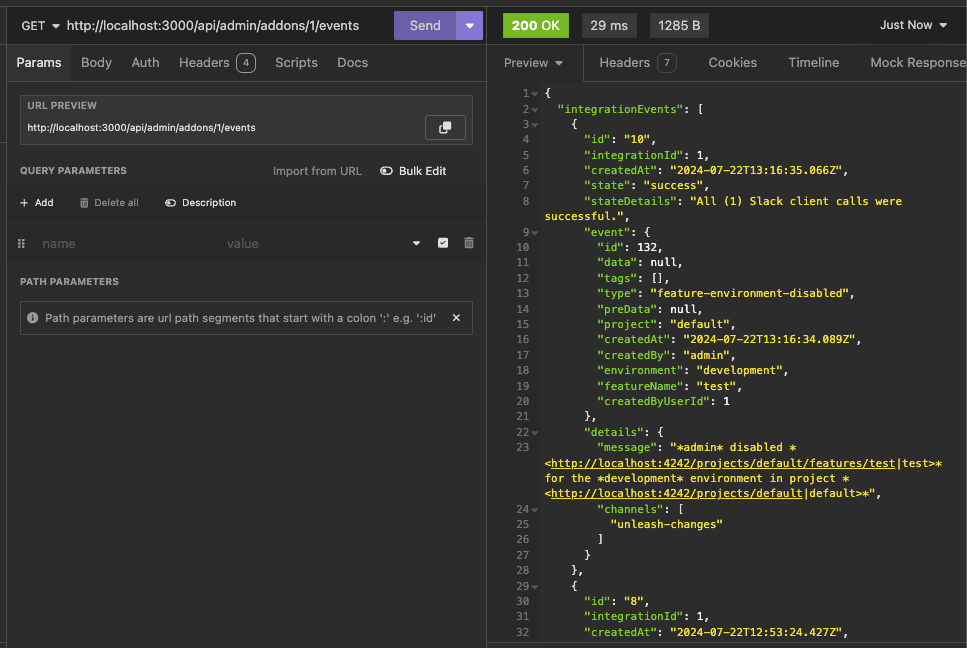

https://linear.app/unleash/issue/2-2439/create-new-integration-events-endpoint

https://linear.app/unleash/issue/2-2436/create-new-integration-event-openapi-schemas

This adds a new `/events` endpoint to the Addons API, allowing us to

fetch integration events for a specific integration configuration id.

Also includes:

- `IntegrationEventsSchema`: New schema to represent the response object

of the list of integration events;

- `yarn schema:update`: New `package.json` script to update the OpenAPI

spec file;

- `BasePaginationParameters`: This is copied from Enterprise. After

merging this we should be able to refactor Enterprise to use this one

instead of the one it has, so we don't repeat ourselves;

We're also now correctly representing the BIGSERIAL as BigInt (string +

pattern) in our OpenAPI schema. Otherwise our validation would complain,

since we're saying it's a number in the schema but in fact returning a

string.
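A "string + pattern" property could look something like this. The fragment is a sketch: the constant name, the exact pattern, and the description text are assumptions rather than the schema in this PR.

```typescript
// Hypothetical schema fragment for a BIGSERIAL id serialized as a string.
const integrationEventIdSchema = {
    type: 'string',
    pattern: '^[0-9]+$', // assumed pattern: one or more digits
    description: 'The integration event ID, sent as a string.',
    example: '7',
} as const;

// Small helper to check a value against the pattern above.
function matchesIdPattern(value: string): boolean {
    return new RegExp(integrationEventIdSchema.pattern).test(value);
}
```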

This PR allows you to gradually lower constraint values, even if they're

above the limits.

It does, however, come with a few caveats because of how Unleash deals

with constraints:

Constraints are just json blobs. They have no IDs or other

distinguishing features. Because of this, we can't compare the current

and previous state of a specific constraint.

What we can do instead, is to allow you to lower the amount of

constraint values if and only if the number of constraints hasn't

changed. In this case, we assume that you also haven't reordered the

constraints (not possible from the UI today). That way, we can compare

constraint values between updated and existing constraints based on

their index in the constraint list.
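The index-based comparison can be sketched like this. It is an illustration under assumptions: the `Constraint` shape is trimmed down, the function name is made up, and treating "same number of values as before" as acceptable is my reading rather than something stated above.

```typescript
// Hypothetical, trimmed-down constraint; real constraints have more fields.
type Constraint = { values?: string[] };

// Returns true if the update is acceptable with respect to the value limit.
function constraintValuesWithinLimit(
    updated: Constraint[],
    existing: Constraint[],
    limit: number,
): boolean {
    const sameConstraintCount = updated.length === existing.length;
    return updated.every((constraint, index) => {
        const newCount = constraint.values?.length ?? 0;
        if (newCount <= limit) return true;
        // Over the limit: only compare by index if the list length is unchanged.
        if (!sameConstraintCount) return false;
        const oldCount = existing[index]?.values?.length ?? 0;
        // Allowed as long as the constraint at this index did not grow.
        return newCount <= oldCount;
    });
}
```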

It's not foolproof, but it's a workaround that you can use. There's a

few edge cases that pop up, but I don't think they're worth trying to

cover:

Case: If you **both** have too many constraints **and** too many

constraint values

Result: You won't be allowed to lower the amount of constraints as long

as the amount of constraint values is still above the limit.

Workaround: First, lower the amount of constraint values until you're

under the limit and then lower constraints. OR, set the constraint you

want to delete to a constraint that is trivially true (e.g. `currentTime

> yesterday` ). That will essentially take that constraint out of the

equation, achieving the same end result.

Case: You re-order constraints and at least one of them has too many

values

Result: You won't be allowed to (except for in the edge case where the

one with too many values doesn't move or switches places with another

one with the exact same amount of values).

Workaround: We don't need one. The order of constraints has no effect on

the evaluation.

https://linear.app/unleash/issue/2-2450/register-integration-events-webhook

Registers integration events in the **Webhook** integration.

Even though this touches a lot of files, most of it is preparation for

the next steps. The only actual implementation of registering

integration events is in the **Webhook** integration. The rest will

follow on separate PRs.

Here's an example of what this looks like in the database table:

```json
{
  "id": 7,
  "integration_id": 2,
  "created_at": "2024-07-18T18:11:11.376348+01:00",
  "state": "failed",
  "state_details": "Webhook request failed with status code: ECONNREFUSED",
  "event": {
    "id": 130,
    "data": null,
    "tags": [],
    "type": "feature-environment-enabled",
    "preData": null,
    "project": "default",
    "createdAt": "2024-07-18T17:11:10.821Z",
    "createdBy": "admin",
    "environment": "development",
    "featureName": "test",
    "createdByUserId": 1
  },
  "details": {
    "url": "http://localhost:1337",
    "body": "{ \"id\": 130, \"type\": \"feature-environment-enabled\", \"createdBy\": \"admin\", \"createdAt\": \"2024-07-18T17: 11: 10.821Z\", \"createdByUserId\": 1, \"data\": null, \"preData\": null, \"tags\": [], \"featureName\": \"test\", \"project\": \"default\", \"environment\": \"development\" }"
  }
}
```

This PR updates the limit validation for constraint numbers on a single

strategy. In cases where you're already above the limit, it allows you

to still update the strategy as long as you don't add any **new**

constraints (that is: the number of constraints doesn't increase).
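The rule boils down to a check like the one below (a sketch; `constraintCountAllowed` is a hypothetical name, not the method in this PR).

```typescript
// An update passes if it is within the limit, or if it is over the limit
// but does not add constraints compared to what the strategy already has.
function constraintCountAllowed(
    updatedCount: number,
    existingCount: number,
    limit: number,
): boolean {
    return updatedCount <= limit || updatedCount <= existingCount;
}
```

For a limit of 30, going from 35 to 35 or from 35 to 33 passes, while going from 35 to 36 does not.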

A discussion point: I've only tested this with unit tests of the method

directly. I haven't tested that the right parameters are passed in from

calling functions. The main reason being that that would involve

updating the fake strategy and feature stores to sync their flag lists

(or just checking that the thrown error isn't a limit exceeded error),

because right now the fake strategy store throws an error when it

doesn't find the flag I want to update.

https://linear.app/unleash/issue/2-2453/validate-patched-data-against-schema

This adds schema validation to patched data, fixing potential issues of

patching data to an invalid state.

This can be easily reproduced by patching a strategy's constraints to be

an object (invalid), instead of an array (valid):

```sh
curl -X 'PATCH' \
  'http://localhost:4242/api/admin/projects/default/features/test/environments/development/strategies/8cb3fec6-c40a-45f7-8be0-138c5aaa5263' \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '[
  {
    "path": "/constraints",
    "op": "replace",
    "from": "/constraints",
    "value": {}
  }
]'
```

Unleash will accept this because there's no validation that the patched

data actually looks like a proper strategy, and we'll start seeing

Unleash errors due to the invalid state.

This PR adapts some of our existing logic in the way we handle

validation errors to support any dynamic object. This way we can perform

schema validation with any object and still get the benefits of our

existing validation error handling.

This PR also takes the liberty to expose the full instancePath as

propertyName, instead of only the path's last section. We believe this

has more upsides than downsides, especially now that we support the

validation of any type of object.

This PR adds Prometheus metrics for when users attempt to exceed the

limits for a given resource.

The implementation sets up a second function exported from the

ExceedsLimitError file that records metrics and then throws the error.

This could also be a static method on the class, but I'm not sure that'd

be better.
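The shape of that second function is roughly as follows. This is a sketch: the class name, error message, and `RecordExceeded` callback are placeholders, and the real function may well emit an event instead of taking a callback.

```typescript
// Placeholder error class; the message text is illustrative only.
class ExceedsLimitErrorSketch extends Error {
    constructor(resource: string, limit: number) {
        super(`Cannot create more than ${limit} of: ${resource}.`);
        this.name = 'ExceedsLimitErrorSketch';
    }
}

// Hypothetical hook that records the attempt (e.g. increments a counter).
type RecordExceeded = (resource: string, limit: number) => void;

// Records the metric first, then throws, so call sites need a single call.
function throwExceedsLimitError(
    record: RecordExceeded,
    resource: string,
    limit: number,
): never {
    record(resource, limit);
    throw new ExceedsLimitErrorSketch(resource, limit);
}
```

Keeping it as a free function means the error class itself stays free of metrics concerns.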

This PR updates the OpenAPI error converter to also work for errors with

query parameters.

We previously only sent the body of the request along with the error,

which meant that query parameter errors would show up incorrectly.

For instance, given a query param with the date format and the invalid

value `01-2020-01`, you'd previously get the message:

> The `from` value must match format "date". You sent undefined

With this change, you'll get this instead:

> The `from` value must match format "date". You sent "01-2020-01".

The important changes here are two things:

- passing both request body and query params

- the 3 lines in `fromOpenApiValidationError` that check where we should

get the value you sent from.
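To give an idea of what that lookup does, here is a sketch. It assumes the validation error carries a path shaped like `/body/<property>` or `/query/<property>`; that path format, the `RequestData` type, and `getSentValue` are assumptions for the example, not the actual code.

```typescript
// Hypothetical container for the two places a value can come from.
type RequestData = {
    body: Record<string, unknown>;
    query: Record<string, unknown>;
};

// Resolves the value the client sent, picking query params or body based
// on the first path segment (assumed to be "query" or "body").
function getSentValue(data: RequestData, instancePath: string): unknown {
    const [source, ...path] = instancePath.split('/').filter(Boolean);
    const root: unknown = source === 'query' ? data.query : data.body;
    return path.reduce<unknown>(
        (current, key) =>
            current !== null && typeof current === 'object'
                ? (current as Record<string, unknown>)[key]
                : undefined,
        root,
    );
}
```

Looking only at the body is what produced the "You sent undefined" message for query parameter errors.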

The rest of it is primarily updating tests to send the right arguments

and some slight rewording to more accurately reflect that this can be

either request body or query params.

Our CSP reports that unsafe-inline is not recommended for styleSrc. This

PR adds a flag for making it possible to remove this element of our CSP

headers. It should allow us to see what (if anything) breaks hard.

We'll store hashes for the last 5 passwords, fetch them all for the user

wanting to change their password, and make sure the password does not

verify against any of the 5 stored hashes.
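The check itself is small. In this sketch, `Verify` stands in for whatever hash comparison is used (in practice that would likely be asynchronous), `storedHashes` is assumed to be ordered newest first, and `isPasswordReused` is a hypothetical name.

```typescript
// Number of previous passwords to check, as described above.
const PASSWORD_HISTORY_SIZE = 5;

// Stand-in for a real hash comparison; synchronous only for brevity.
type Verify = (password: string, hash: string) => boolean;

// True if `candidate` verifies against any of the most recent stored hashes.
// Assumes `storedHashes` is ordered newest first.
function isPasswordReused(
    candidate: string,
    storedHashes: string[],
    verify: Verify,
): boolean {
    return storedHashes
        .slice(0, PASSWORD_HISTORY_SIZE)
        .some((hash) => verify(candidate, hash));
}
```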

Includes some password-related UI/UX improvements and refactors. Also

some fixes related to reset password rate limiting (instead of an

unhandled exception), and token expiration on error.

---------

Co-authored-by: Nuno Góis <github@nunogois.com>

PR #7519 introduced the pattern of using `createApiTokenService` instead

of newing it up. This usage was introduced in a concurrent PR (#7503),

so we're just cleaning up and making the usage consistent.

Deletes API tokens bound to specific projects when the last project they're mapped to is deleted.

---------

Co-authored-by: Tymoteusz Czech <2625371+Tymek@users.noreply.github.com>

Co-authored-by: Thomas Heartman <thomas@getunleash.io>

If you have SDK tokens scoped to projects that are deleted, you should

not get access to any flags with those.

---------

Co-authored-by: David Leek <david@getunleash.io>

This PR adds a feature flag limit to Unleash. It's set up to be

overridden in Enterprise, where we turn the limit up.

I've also fixed a couple bugs in the fake feature flag store.

This adds an extended metrics format to the metrics ingested by Unleash

and sent by running SDKs in the wild. Notably, we don't store this

information anywhere new in this PR, this is just streamed out to

VictoriaMetrics - the point of this project is insight, not analysis.

Two things to look out for in this PR:

- I've chosen to extend the registration event and also send that

when we receive metrics. This means that the new data is received on

startup and on heartbeat. This takes us in the direction of collapsing

these two calls into one at a later point

- I've wrapped the existing metrics events in some "type safety", it

ain't much because we have 0 type safety on the event emitter so this

also has some if checks that look funny in TS that actually check if the

data shape is correct. Existing tests that check this are more or less

preserved

This PR adds the back end for API token resource limits.

It adds the limit to the schema and checks the limit in the service.

## Discussion points

The PAT service uses a different service and different store entirely,

so I have not included testing any edge cases where PATs are included.

However, that could be seen as "knowing too much". We could add tests

that check both of the stores in tandem, but I think it's overkill for

now.

This PR updates the Unleash UI to use the new environment limit.

As it turns out, we already had an environment limit in the UI, but it

was hardcoded (luckily, its value is the same as the new default value

🥳).

In addition to the existing places this limit was used, it also disables

the "new environment" button if you've reached the limit. Because this

limit already exists, I don't think we need a flag for it. The only

change is that you can't click a button (that should be a link!) that

takes you to a page you can't do anything on.

This PR adds limits for environments to the resource limit schema. The

actual limiting will have to be done in Enterprise, however, so this is

just laying the groundwork.

This fixes the issue where project names that are 100 characters long

or longer would cause the project creation to fail. This is because

the resulting ID would be longer than the 100 character limit imposed

by the back end.

We solve this by capping the project ID to 90 characters, which leaves

us with 10 characters for the suffix, meaning you can have 1 billion

projects (999,999,999 + 1) that start with the same 90

characters (after slugification) before anything breaks.
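A sketch of the capping logic, under assumptions: the `slugify` helper, the `-<number>` suffix format, and `generateProjectId` are all hypothetical stand-ins. A dash plus up to nine digits fits in the 10 characters left over, which matches the 999,999,999 + 1 figure above.

```typescript
// Cap for the slugified base, leaving 10 of the 100 characters for a suffix.
const MAX_BASE_LENGTH = 90;

// Hypothetical slug helper; the real slugification may differ.
function slugify(name: string): string {
    return name
        .trim()
        .toLowerCase()
        .replace(/[^a-z0-9]+/g, '-')
        .replace(/^-+|-+$/g, '');
}

// Builds an ID from a name, capping the base at 90 characters and appending
// a numeric suffix (assumed format: "-<n>") while the ID is already taken.
function generateProjectId(
    name: string,
    isTaken: (id: string) => boolean,
): string {
    const base = slugify(name).slice(0, MAX_BASE_LENGTH);
    if (!isTaken(base)) return base;
    for (let n = 1; ; n++) {
        const candidate = `${base}-${n}`;
        if (!isTaken(candidate)) return candidate;
    }
}
```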

It's a little shorter than what it strictly has to be (we could

probably get around with 95 characters), but at this point, you're

reaching into edge case territory anyway, and I'd rather have a little

too much wiggle room here.

This PR removes the last two flags related to the project management

improvements project, making the new project creation form GA.

In doing so, we can also delete the old project creation form (or at

least the page, the form is still in use in the project settings).

This PR:

- adds a flag to anonymize user emails in the new project cards

- performs the anonymization using the existing `anonymise` function

that we have.

It does not anonymize the system user, nor does it anonymize groups. It

does, however, leave the gravatar url unchanged, as that is already

hashed (but we may want to hide that too).

This PR also does not affect the user's name or username. Considering

the target is the demo instance where the vast majority of users don't

have this (and if they do, they've chosen to set it themselves), this

seems an appropriate mitigation.

With the flag turned off:

With the flag on:

Fix project role assignment for users with `ADMIN` permission, even if

they don't have the Admin root role. This happens when e.g. users

inherit the `ADMIN` permission from a group root role, but are not

Admins themselves.

---------

Co-authored-by: Gastón Fournier <gaston@getunleash.io>

This PR adds metrics tracking for:

- "maxConstraintValues": the highest number of constraint values that

are in use

- "maxConstraintsPerStrategy": the highest number of constraints used on

a strategy

It updates the existing feature strategy read model that returns max

metrics for other strategy-related things.

It also moves one test into a more fitting describe block.

Instead of running exists on every row, we are joining the exists, which

runs the query only once.

This decreased load time on my huge dataset from 2000ms to 200ms.

Also added tests that values still come through as expected.

**Upgrade to React v18 for Unleash v6. Here's why I think it's a good

time to do it:**

- Command Bar project: We've begun work on the command bar project, and

there's a fantastic library we want to use. However, it requires React

v18 support.

- Straightforward Upgrade: I took a look at the upgrade guide

https://react.dev/blog/2022/03/08/react-18-upgrade-guide and it seems

fairly straightforward. In fact, I was able to get React v18 running

with minimal changes in just 10 minutes!

- Dropping IE Support: React v18 no longer supports Internet Explorer

(IE), which is no longer supported by Microsoft as of June 15, 2022.

Upgrading to v18 in v6 would be a good way to align with this change.

TS updates:

* FC children has to be explicit:

https://stackoverflow.com/questions/71788254/react-18-typescript-children-fc

* forcing version 18 types in resolutions:

https://sentry.io/answers/type-is-not-assignable-to-type-reactnode/

Test updates:

* fixing SWR issue that we have always had but it manifests more in new

React (https://github.com/vercel/swr/issues/2373)

---------

Co-authored-by: kwasniew <kwasniewski.mateusz@gmail.com>

This PR fixes how Unleash handles tag values. Specifically, it does

these things:

1. Trims leading and trailing whitespace from tag values before

inserting them into the database

2. Updates OpenAPI validation to not allow whitespace-only and to ignore

leading and trailing whitespace
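Both steps can be sketched as follows. The `Tag` shape is trimmed down and the helper names are hypothetical; the length bounds are passed in rather than hard-coded because the actual constants aren't quoted here.

```typescript
// Hypothetical, trimmed-down tag.
type Tag = { type: string; value: string };

// Step 1: trim leading and trailing whitespace before storing the tag.
function normalizeTag(tag: Tag): Tag {
    return { ...tag, value: tag.value.trim() };
}

// Step 2: validate against the trimmed value, so whitespace-only values
// fail and surrounding whitespace doesn't count towards the length.
function isValidTagValue(
    value: string,
    minLength: number,
    maxLength: number,
): boolean {
    const trimmed = value.trim();
    return trimmed.length >= minLength && trimmed.length <= maxLength;
}
```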

Additionally, it moves the tag length constants into the constants file

from the Joi tag schema file. This is because importing the values

previously rendered them as undefined (probably due to a circular

dependency somewhere in the system). This means that the previous values

were also ignored by OpenAPI.

UI updates reflecting this will follow.

## Background

When you tag a flag, there's nothing stopping you from using an entirely

empty tag or a tag with leading/trailing whitespace.

Empty tags make little sense and leading/trailing whitespace differences

are incredibly subtle:

Additionally, leading and trailing whitespace is not shown in the

dropdown list, so you'd have to guess at which is the right one.

Joining might not always be the best solution. If a table contains too

much data, and you later run sorting on top of it, it will be slow.

In this case, we will first reduce the instances table to a minimal

version because instances usually share the same SDK versions. Only

after that, we join.
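The "reduce first, join second" idea, illustrated on in-memory arrays. This is not the SQL from the PR: the `Instance` and `Application` shapes, the join key, and both function names are made up for the example.

```typescript
// Hypothetical row shapes.
type Instance = { appName: string; sdkVersion: string };
type Application = { appName: string; project: string };

// Step 1: collapse instances to distinct (appName, sdkVersion) pairs.
function distinctSdkVersions(instances: Instance[]): Instance[] {
    const unique = new Map<string, Instance>();
    for (const { appName, sdkVersion } of instances) {
        unique.set(`${appName}|${sdkVersion}`, { appName, sdkVersion });
    }
    return Array.from(unique.values());
}

// Step 2: join the (much smaller) reduced set with applications.
function joinWithApplications(
    instances: Instance[],
    applications: Application[],
): Array<Instance & { project: string }> {
    const projectByApp = new Map<string, string>();
    for (const app of applications) {
        projectByApp.set(app.appName, app.project);
    }
    const joined: Array<Instance & { project: string }> = [];
    for (const instance of distinctSdkVersions(instances)) {
        const project = projectByApp.get(instance.appName);
        if (project !== undefined) joined.push({ ...instance, project });
    }
    return joined;
}
```

With thousands of instances sharing a handful of SDK versions, the join and any later sorting only touch a handful of rows.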

Based on some customer data, we reduced query time from 3000ms to 60ms.

However, this will vary based on the number of instances the customer

has.

This PR removes the flag for the new project card design, making it GA.

It also removes deprecated components and updates one reference (in the

groups card) to the new components instead.

This PR removes all the feature flags related to the project list split

and updates the snapshot.

Now the project list will always contain "my projects" and "other

projects"

## About the changes

After an internal conversation, we concluded that syncExternalGroups is

an action that Unleash performs as a system, not something triggered by

the user. We keep the method and just write the event log that the

action was performed by the system user.

## About the changes

Removes the deprecated state endpoint, state-service (despite the

service itself not having been marked as deprecated), and the file

import in server-impl. Leaves a TODO where the file import used to be, as a

marker for a replacement file import based on the new import/export

functionality.

Removes /edge/metrics. This has been superseded by

/api/client/metrics/bulk. Once this is merged, Unleash 6.0 will require

Edge > 17.0.0. (We recommend at least v19.1.3)

We are keeping the UI hidden for mdsol behind kill switch, but I feel

like we can remove the flag completely for backend, so everyone will

keep collecting data.

Co-authored-by: Gitar Bot <noreply@gitar.co>

## About the changes

This aligns us with the requirement of having ip in all events. After

tackling the enterprise part we will be able to make the ip field

mandatory here:

2c66a4ace4/src/lib/types/events.ts (L362)

In preparation for v6, this PR removes usage and references to

`error.description`, favoring `error.message` instead (as mentioned in

#4380).

I found no references in the front end, so this might be (I believe it

to be) all the required changes.

This PR is part of #4380 - Remove legacy `/api/feature` endpoint.

## About the changes

### Frontend

- Removes the useFeatures hook

- Removes the part of StrategyView that displays features using this

strategy (this has not been working since v4.4)

- Removes 2 unused features entries from routes

### Backend

- Removes the /api/admin/features endpoint

- Moves a couple of non-feature related tests (auth etc) to use

/admin/projects endpoint instead

- Removes a test that was directly related to the removed endpoint

- Moves a couple of tests to the projects/features endpoint

- Reworks some tests to fetch features from projects features endpoint

and strategies from project strategies

## About the changes

EdgeService is the only place where we use active tokens validation in

bulk. By switching to validating from the cache, we no longer need a

method to return all active tokens from the DB.

## About the changes

We've identified that the Bearer token middleware is not working for
the /enterprise instance.

Looking at a few lines below:

88e3b1b79e/src/lib/app.ts (L81-L84)

we can see that we were missing the basePath in the use definition.

## About the changes

Currently, when the server returns the HTML entry point, no headers are
attached. We encountered an issue with a deployment where this affected
us.

A brief description:

1. We deployed the most recent version. Noticed an unrelated issue.

2. Users tried to use the most recent version and due to their client

cache, requested assets that did not exist in the newest version.

3. Our cache layer cached the assets that were not there with the HTML

response. It had to infer the type based on the filename because there

was no attached `Content-Type` header. This cache was very sticky.

4. After rolling back we saw the HTML response (from the cache) instead

of the appropriate response from the upstream Unleash application.

This PR does a few things.

1. When responding with the HTML entry point, it adds a
   `Content-Type: text/html` header.

2. When the client is requesting an asset (a path that ends with an
   extension), it instead returns a 404 and instructs caches not to
   cache the response (`Cache-Control: no-cache`). This will prevent
   misses from getting cached.
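
The two behaviours can be sketched as a pure decision function. This is only an illustration under assumptions: `respondToUnmatchedPath`, `looksLikeAsset`, and the `EntryPointResponse` shape are hypothetical names, not the ones used in the Unleash server, and the sketch ignores query strings.

```typescript
// Hypothetical response shape; the real handler writes to an Express response.
interface EntryPointResponse {
    status: number;
    headers: Record<string, string>;
    body: string;
}

// A path such as /static/index-abc123.js ends with a file extension,
// so it is treated as an asset request rather than an SPA route.
const looksLikeAsset = (path: string): boolean => /\.[a-zA-Z0-9]+$/.test(path);

function respondToUnmatchedPath(
    path: string,
    indexHtml: string,
): EntryPointResponse {
    if (looksLikeAsset(path)) {
        // Missing asset: answer 404 and mark the response with
        // Cache-Control: no-cache so the miss does not stick in caches.
        return { status: 404, headers: { 'Cache-Control': 'no-cache' }, body: '' };
    }
    // Any other path is an SPA route: serve the entry point with an explicit type.
    return {
        status: 200,
        headers: { 'Content-Type': 'text/html' },
        body: indexHtml,
    };
}
```

With this split, a stale client asking for an asset from an older build gets a 404 instead of an HTML page that a cache layer would have to guess the type of.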

## Discussion points

To me, there doesn't seem to be a lot of test infra on serving the SPA

application. If that is an error, please indicate where that is and an

appropriate test can be added.

Adds a postgres_version gauge to allow us to see postgres_version in

prometheus and to post it upstream when version checking. Depends on

https://github.com/bricks-software/version-function/pull/20 to be merged

first to ensure our version-function doesn't crash when given the

postgres-version data.

1. Added new schema and tests

2. Controller also accepts the data

3. Also sending fake data from frontend currently

Next steps: implement the service/store layer and the frontend.

This fixes the case when a customer has thousands of strategies, causing
the React UI to crash. We still consider it incorrect to use that many
strategies, so this is more of a workaround to help the customer out of
a crashing state.

We put it behind a flag called `manyStrategiesPagination` and plan to

only enable it for the customer in trouble.

We now also send the project ID to Prometheus, querying it from the
database. This sets us up for a Grafana dashboard.

The metrics are also behind a flag, just in case they cause CPU or
memory issues.

This PR updates the project service to automatically create a project id

if it is not provided. The feature is behind a flag. If an ID is

provided, it will still attempt to use that ID instead.

This PR adds a function to automatically generate a project ID on

creation. Using this when the id is missing will be handled in following

PRs.

The function uses the existing `slug` package to create a slug, and then
adds 12 characters taken from a uuidv4 string to generate an ID.

The included tests check that the 12 character hash is added and that

the resulting string is url friendly (by checking that

`encodeURIComponent` doesn't change it).
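
A minimal sketch of the idea, not the code from the PR. The `slugify` helper below is a simplified stand-in for the `slug` package, and both the `-` separator and the choice of the first 12 hex digits of the UUID are assumptions; the description only says that 12 characters of a uuidv4 string are used.

```typescript
import { randomUUID } from 'node:crypto';

// Simplified stand-in for the `slug` package: lowercase the name and
// collapse every run of non-alphanumeric characters into a single "-".
const slugify = (name: string): string =>
    name
        .toLowerCase()
        .replace(/[^a-z0-9]+/g, '-')
        .replace(/^-+|-+$/g, '');

// Assumption: the suffix is the first 12 hex digits of a v4 UUID,
// joined to the slug with a dash.
function generateProjectId(name: string): string {
    const suffix = randomUUID().replace(/-/g, '').slice(0, 12);
    return `${slugify(name)}-${suffix}`;
}

// The same check the tests describe: the ID survives URL encoding unchanged.
const exampleId = generateProjectId('My  New Project!');
console.log(exampleId, encodeURIComponent(exampleId) === exampleId);
```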

We could also test a lot of edge cases (such as dealing with double

spaces, trimming the string, etc), but I think that's better handled by

the library itself (but you can check out what I removed in

2d9bcb6390

for an idea).

The function doesn't really need to be in the service; it could be moved to a util. But for proximity, I'll create it here first.

Regarding ticket #6892:

I would like to enable the use of a CA certificate without requiring

other certificates. This would be useful for AWS Helm, as AWS only

provides a single PEM file for DB connections.

Final rank has always been ordered correctly by default. But since 5.12
I have seen cases where it sometimes is not ordered. Just to be extra
sure, I am in favor of ordering it explicitly.

Add a flag to enable/disable the new UI for project creation.

This flag is separate from the impl on the back end so that we can

enable one without the other (but uses flag dependencies in Unleash, so

that we can never enable the new UI without the new back end).

I have not set the flag to `true` in server startup because the form

doesn't work yet, so it's a manual step for now.

This PR removes the workaround introduced in

https://github.com/Unleash/unleash/pull/6931. After

https://github.com/ivarconr/unleash-enterprise/pull/1268 has been

merged, this should be safe to apply.

Notably, this PR:

- tightens up the type for the enable change request function, so we can

use that to inform the code

- skips trying to do anything with an empty array

The last point is less important than it might seem because both the env
validation and the current implementation of the callback are
essentially no-ops when there are no envs. However, that's hard to
enforce. If we just exit out early, then at least we know nothing
happens.

Optionally, we could do something like this instead, but I'm not sure

it's better or worse. Happy to take input.

```ts
const crEnvs = newProject.changeRequestEnvironments ?? [];
await this.validateEnvironmentsExist(crEnvs.map((env) => env.name));
const changeRequestEnvironments =
    await enableChangeRequestsForSpecifiedEnvironments(crEnvs);

data.changeRequestEnvironments = changeRequestEnvironments;
```

This PR improves the handling of change request enables on project

creation in two ways:

1. We now verify that the envs you try to enable CRs for exist before

passing them on to the enterprise functionality.

2. We include data about environments and change request environments in

the project created events.

Due to how we handle redirects of embedded proxy, we ended up counting

the same request twice. This PR adds a boolean to res.locals which we

then check if set to avoid double counting.
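
As a rough sketch of the guard (the flag name `requestCounted` and the `ResponseLike` type are hypothetical; the PR only states that a boolean is stored on `res.locals`):

```typescript
// Minimal stand-in for an Express response; only `locals` matters here.
interface ResponseLike {
    locals: Record<string, unknown>;
}

const stats = { requests: 0 };

// Hypothetical key name for the marker stored on res.locals.
const COUNTED_KEY = 'requestCounted';

function countRequestOnce(res: ResponseLike): void {
    if (res.locals[COUNTED_KEY] === true) {
        return; // already counted before the internal redirect
    }
    res.locals[COUNTED_KEY] = true;
    stats.requests += 1;
}
```

Because `res.locals` lives as long as the response object, the marker set on the first pass is still visible when the redirected request reaches the counting code again.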

## About the changes

What's going on is the following:

1. When a token is not found in the token's cache we try to find it in

the db

2. To prevent a denial of service attack using invalid tokens, we cache

the invalid tokens so we don't hit the db.

3. The issue is that we stored this token in the cache regardless of
   whether we found it or not. And if the token was valid the first time,
   we'd add a timestamp to avoid querying this token again the next time.

4. On the next iteration the token should be in the cache:

54383a6578/src/lib/services/api-token-service.ts (L162)

but for some reason it is not, so we have to make a query. This is
where the query prevention mechanism kicks in: it finds the token in
the cache of misses and kicks us out. This PR fixes this by only
storing the token in the cache of misses if it was not found:

54383a6578/src/lib/services/api-token-service.ts (L164-L165)

The token was added to the cache because we were not checking whether it
had expired. We have now added that check, and we also log expired
tokens.
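
The intended behaviour of the cache of misses can be sketched like this. It is a simplified, synchronous illustration: `createTokenLookup`, `findInDb`, and the TTL value are hypothetical, the real service is asynchronous, and expiry handling is left out.

```typescript
interface ApiToken {
    secret: string;
}

type FindInDb = (secret: string) => ApiToken | undefined;

function createTokenLookup(findInDb: FindInDb, missTtlMs: number = 60_000) {
    const activeTokens = new Map<string, ApiToken>();
    // Secrets we recently failed to find, mapped to the time of the failed lookup.
    const recentMisses = new Map<string, number>();

    return (secret: string, now: number = Date.now()): ApiToken | undefined => {
        const cached = activeTokens.get(secret);
        if (cached) {
            return cached;
        }
        const missedAt = recentMisses.get(secret);
        if (missedAt !== undefined && now - missedAt < missTtlMs) {
            return undefined; // known-bad secret: do not hit the DB again
        }
        const found = findInDb(secret);
        if (found) {
            // A valid token is never recorded as a miss.
            activeTokens.set(secret, found);
            return found;
        }
        recentMisses.set(secret, now);
        return undefined;
    };
}
```

The important line is the last branch: a secret is only remembered as a miss when the DB lookup really came back empty, so a valid token can never be rejected by the query prevention mechanism.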

Some improvement opportunities:

- I don't think we display in the UI that a token has expired, which
  probably led to this issue

- When a token has expired, we don't return a specific error message or
  error response saying so, which is not very helpful for users

This PR introduces a configuration option (`authentication.demoAllowAdminLogin`) that allows you to log in as admin when using demo authentication. To do this, use the username `admin`.

## About the changes

The `admin` user currently cannot be accessed in `demo` authentication
mode, as the auth mode requires only an email to log in, and the admin
user is not created with an email. This change allows logging in as the
admin user only if `AUTH_DEMO_ALLOW_ADMIN_LOGIN` is set to `true` (or
the corresponding `authDemoAllowAdminLogin` config is enabled).


Closes #6398

### Important files

[demo-authentication.ts](https://github.com/Unleash/unleash/compare/main...00Chaotic:unleash:feat/allow_admin_login_using_demo_auth?expand=1#diff-c166f00f0a8ca4425236b3bcba40a8a3bd07a98d067495a0a092eec26866c9f1R25)

## Discussion points

Can continue discussion of [this

comment](https://github.com/Unleash/unleash/pull/6447#issuecomment-2042405647)

in this PR.

---------

Co-authored-by: Thomas Heartman <thomasheartman+github@gmail.com>

This commit adds an `environments` property to the project created
payload. The list contains only the environments that the project has
enabled.

The point of adding it is that it gives you a better overview of what
you have created.

This PR adds the `projectListNewCards` flag to the constant defined in

`experimental.ts`. This should allow the API to pass that value to the

front end.

## About the changes

Add time metrics to relevant queries:

- get

- getAll

- bulkInsert

- count

- exists

Ignored because might not be that relevant:

- insert

- delete

- deleteAll

- update

## About the changes

This PR removes the feature flag `queryMissingTokens` that was fully

rolled out.

It introduces a new way of checking edgeValidTokens, controlled by the
flag `checkEdgeValidTokensFromCache`, that relies on the cached data but
hits the DB if needed.

The assumption is that most of the time Edge will find tokens in the
cache, except for a few cases in which a new token is queried. Of all
the tokens, we expect at most one to hit the DB, and in that case
querying a single token should be better than querying all the tokens.
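
A small sketch of the cache-first check, assuming a hypothetical `validateTokens` helper and a synchronous `findInDb` callback (the real code is asynchronous and lives in the token and Edge services):

```typescript
interface EdgeToken {
    secret: string;
    projects: string[];
}

function validateTokens(
    secrets: string[],
    cache: Map<string, EdgeToken>,
    findInDb: (secret: string) => EdgeToken | undefined,
): EdgeToken[] {
    const valid: EdgeToken[] = [];
    for (const secret of secrets) {
        // Cache first; only a secret missing from the cache costs a single-token query.
        const token = cache.get(secret) ?? findInDb(secret);
        if (token) {
            cache.set(secret, token);
            valid.push(token);
        }
    }
    return valid;
}
```

In the common case every secret is already cached and no query runs at all, which is the trade-off described above: at worst one single-token query instead of loading every active token.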

This makes it configurable either through a single JSON file with all
three certificates as separate keys or via separate files for the CA,
cert, and key.

Fixes #6718

I've tried to use/add the audit info to all events I could see/find.

This makes this PR necessarily huge, because we do store quite a few

events.

I realise it might not be complete yet, but tests

run green, and I think we now have a pattern to follow for other events.

This PR adds an optional function parameter to the `createProject`

function that is intended to enable change requests for the newly

created project.

The assumption is that all the logic within will be decided in the

enterprise impl. The only thing we want to verify here is that it is

called after the project has been created.

This PR adds functionality to the `createProject` function to choose

which environments should be enabled when you create a new project. The

new `environments` property is optional and omitting it will make it

work exactly as it does today.

The current implementation is fairly strict. We have some potential

ideas to make it easier to work with, but we haven't agreed on any yet.

Making it this strict means that we can always relax the rules later.

The rules are (codified in tests):

- If `environments` is not provided, all non-deprecated environments are

enabled

- If `environments` is provided, only the environments listed are

enabled, regardless of whether they're deprecated or not

- If `environments` is provided and is an empty array, the service
  throws an error. The API should disallow that via the schema anyway,
  but this catches it in case it sneaks in some other way.

- If `environments` is provided and contains one or more environments
  that don't exist, the service throws an error. While we could ignore
  them, that would lead to more complexity because we'd also have to
  check that at least one of the environments is valid. It would also
  lead to errors being silently ignored, which may or may not be good
  for the user experience.
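
The four rules can be summarised in a few lines. This is a sketch only: `resolveProjectEnvironments`, the `Environment` shape, and the error messages are made up for illustration and are not the names used in the service.

```typescript
interface Environment {
    name: string;
    deprecated: boolean;
}

function resolveProjectEnvironments(
    allEnvironments: Environment[],
    requested?: string[],
): string[] {
    // Rule 1: nothing requested, so enable every non-deprecated environment.
    if (requested === undefined) {
        return allEnvironments
            .filter((env) => !env.deprecated)
            .map((env) => env.name);
    }
    // Rule 3: an explicit empty list is an error.
    if (requested.length === 0) {
        throw new Error('A project must have at least one environment');
    }
    // Rule 4: every requested environment must exist.
    const known = new Set(allEnvironments.map((env) => env.name));
    const missing = requested.filter((name) => !known.has(name));
    if (missing.length > 0) {
        throw new Error(`Unknown environments: ${missing.join(', ')}`);
    }
    // Rule 2: use exactly what was requested, deprecated or not.
    return requested;
}
```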

The API endpoint for this sits in enterprise, so no customer-facing

changes are part of this.

We encountered an issue with a customer because this query was returning

3 million rows. The problem arose from each instance reporting

approximately 100 features, with a total of 30,000 instances. The query

was joining these, thus multiplying the data. This approach was fine for

a reasonable number of instances, but in this extreme case, it did not

perform well.

This PR modifies the logic; instead of performing outright joins, we are

now grouping features by environment into an array, resulting in just

one row returned per instance.

I tested locally with the same dataset. Previously, loading this large

instance took about 21 seconds; now it has reduced to 2 seconds.

Although this is still significant, the dataset is extensive.

Previously, we were not validating that the ID was a number, which

sometimes resulted in returning our database queries (source code) to

the frontend. Now, we have validation middleware.
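
The check itself is small. A sketch, assuming a hypothetical `parseNumericId` helper that a validation middleware could call before the value ever reaches a query:

```typescript
// Returns the numeric ID, or undefined when the raw path parameter is not
// a plain non-negative integer; a caller would answer with a 400 in that case.
function parseNumericId(raw: string): number | undefined {
    return /^\d+$/.test(raw) ? Number(raw) : undefined;
}
```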

Previously, we were extracting the project from the token, but now we

will retrieve it from the session, which contains the full list of

projects.

This change also resolves an issue we encountered when the token was a

multi-project token, formatted as []:dev:token. Previously, it was

unable to display the exact list of projects. Now, it will show the

exact project names.

<details>

<summary>Feature Flag Cleanup</summary>

| Stale Flag | Value |
| ---------- | ------- |
| stripClientHeadersOn304 | true |

</details>

<details>

<summary>Trigger</summary>

https://github.com/Unleash/unleash/issues/6559#issuecomment-2058848984

</details>

<details>

<summary>Bot Commands</summary>

`@gitar-bot cleanup stale_flag=value` will cleanup a stale feature flag.

Replace `stale_flag` with the name of the stale feature flag and `value`

with either `true` or `false`.

</details>

---------

Co-authored-by: Gitar Bot <noreply@gitar.co>

## About the changes

- Removes the feature flag for the created_by migrations.

- Adds a configuration option in IServerOption for

`ENABLE_SCHEDULED_CREATED_BY_MIGRATION` that defaults to `false`

- When set on startup, the new configuration option enables scheduling of
  the two created_by migration services (features+events)

- Removes the dependency on flag provider in EventStore as it's no

longer needed

- Adds a brief description of the new configuration option in

`configuring-unleash.md`

- Sets the events created_by migration interval to 15 minutes, up from

2.

---------

Co-authored-by: Gastón Fournier <gaston@getunleash.io>

## About the changes

This PR provides a service that allows a scheduled function to run in a

single instance. It's currently not in use but tests show how to wrap a

function to make it single-instance:

65b7080e05/src/lib/features/scheduler/job-service.test.ts (L26-L32)

The key `'test'` is used to identify the group and most likely should

have the same name as the scheduled job.

---------

Co-authored-by: Christopher Kolstad <chriswk@getunleash.io>

This PR adds a counter in Prometheus for counting the number of

"environment disabled" events we get per project. The purpose of this is

to establish a baseline for one of the "project management UI" project's

key results.

## On gauges vs counters

This PR uses a counter. Using a gauge would give you the total number of

envs disabled, not the number of disable events. The difference is

subtle, but important.

For projects that were created before the new feature, the gauge might

be appropriate. Because each disabled env would require at least one

disabled event, we can get a floor of how many events were triggered for

each project.

However, for projects created after we introduce the planned change,

we're not interested in the total envs anymore, because you can disable

a hundred envs on creation with a single action. In this case, a gauge

showing 100 disabled envs would be misleading, because it didn't take

100 events to disable them.

So the interesting metric here is how many times did you specifically

disable an environment in project settings, hence the counter.
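
To make the difference concrete, here is a toy in-memory model (not Prometheus code; `ProjectState` and the helper names are invented for the illustration):

```typescript
interface ProjectState {
    disabledEnvs: Set<string>;
    disableEvents: number;
}

// Creating a project with envs already disabled is a single action
// and does not emit any "environment disabled" events.
function createProject(disabledOnCreation: string[]): ProjectState {
    return { disabledEnvs: new Set(disabledOnCreation), disableEvents: 0 };
}

// Disabling an env in project settings emits one event per action.
function disableEnvironment(project: ProjectState, env: string): void {
    project.disabledEnvs.add(env);
    project.disableEvents += 1;
}

// What a gauge would report: the current number of disabled envs.
const gaugeValue = (project: ProjectState): number => project.disabledEnvs.size;

// What the counter reports: how many disable actions were taken.
const counterValue = (project: ProjectState): number => project.disableEvents;
```

A project created with 100 envs disabled and then edited once would show a gauge of 101 but a counter of 1, and the counter is the number that answers the question.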

## Assumptions and future plans

To make this easier on ourselves, we make the following assumption:
people primarily disable envs **when creating a project**.

This means that there might be a few lagging indicators granting some

projects a smaller number of events than expected, but we may be able to

filter those out.

Further, if we had a metric for each project and its creation date, we

could correlate that with the metrics to answer the question "how many

envs do people disable in the first week? Two weeks? A month?". Or

worded differently: after creating a project, how long does it take for

people to configure environments?

Similarly, if we gather that data, it will also make filtering out the

number of events for projects created **after** the new changes have

been released much easier.

The good news: Because the project creation metric with dates is a

static aggregate, it can be applied at any time, even retroactively, to

see the effects.

This PR expands upon #6773 by returning the list of removed properties

in the API response. To achieve this, I added a new top-level `warnings`

key to the API response and added an `invalidContextProperties` property

under it. This is a list with the keys that were removed.
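
Roughly, the shape looks like the sketch below. The function signatures are assumptions made for illustration (the real `clean-context` function may differ); only the `warnings` and `invalidContextProperties` keys come from this PR.

```typescript
type SdkContext = Record<string, unknown>;

interface CleanedContext {
    context: SdkContext;
    removedProperties: string[];
}

// Keeps string-valued top-level fields and the `properties` object,
// and reports every other key as removed.
function cleanContext(input: SdkContext): CleanedContext {
    const context: SdkContext = {};
    const removedProperties: string[] = [];
    for (const [key, value] of Object.entries(input)) {
        if (key === 'properties' || typeof value === 'string') {
            context[key] = value;
        } else {
            removedProperties.push(key);
        }
    }
    return { context, removedProperties };
}

// Hypothetical helper showing where the list ends up in the response.
function buildWarnings(input: SdkContext) {
    const { removedProperties } = cleanContext(input);
    return { warnings: { invalidContextProperties: removedProperties } };
}
```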

## Discussion points

**Should we return the type of each removed key's value?** We could

expand upon this by also returning the type that was considered invalid

for the property, e.g. `invalidProp: 'object'`. This would give us more

information that we could display to the user. However, I'm not sure

it's useful? We already return the input as-is, so you can always

cross-check. And the only type we allow for non-`properties` top-level

properties is `string`. Does it give any useful info? I think if we want

to display this in the UI, we might be better off cross-referencing with

the input?

**Can properties be invalid for any other reason?** As far as I can

tell, that's the only reason properties can be invalid for the context.

OpenAPI will prevent you from using a type other than string for the

context fields we have defined and does not let you add non-string

properties to the `properties` object. So all we have to deal with are

top-level properties. And as long as they are strings, then they should

be valid.

**Should we instead infer the diff when creating the model?** In this

first approach, I've amended the `clean-context` function to also return

the list of context fields it has removed. The downside to this approach

is that we need to thread it through a few more hoops. Another approach

would be to compare the input context with the context used to evaluate

one of the features when we create the view model and derive the missing

keys from that. This would probably work in 98 percent of cases.

However, if your result contains no flags, then we can't calculate the

diff. But maybe that's alright? It would likely be fewer lines of code
(but might require additional testing), although picking an environment
to compare against feels hacky.

Don't include invalid context properties in the contexts that we

evaluate.

This PR removes any non-`properties` fields that have a non-string

value.

This prevents the front end from crashing when trying to render an

object.

Expect follow-up PRs to include more warnings/diagnostics we can show to

the end user to inform them of what fields have been removed and why.

## About the changes

This PR establishes a simple yet effective mechanism to avoid DDoS

against our DB while also protecting against memory leaks.

This will enable us to release the flag `queryMissingTokens`, making our
token validation consistent across different nodes.

---------

Co-authored-by: Nuno Góis <github@nunogois.com>

Converts `newContextFieldUI` release flag to

`disableShowContextFieldSelectionValues` kill switch.

The kill switch controls whether we show the value selection above the
search field when there are more than 100 values.

---------

Signed-off-by: andreas-unleash <andreas@getunleash.ai>

Adds a bearer token middleware that adds support for tokens prefixed

with "Bearer" scheme. Prefixing with "Bearer" is optional and the old

way of authenticating still works, so we now support both ways.
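
The core of such a middleware is a small normalisation step. A sketch, with a hypothetical `stripBearerPrefix` helper; matching the scheme case-insensitively is my choice here (HTTP auth schemes are case-insensitive), not something the PR states:

```typescript
// Accepts both "Bearer <token>" and a bare "<token>" and returns the token.
function stripBearerPrefix(authorization: string | undefined): string | undefined {
    if (authorization === undefined) {
        return undefined;
    }
    const match = /^Bearer\s+(.+)$/i.exec(authorization);
    // No prefix: the header already holds the raw token (the old way).
    return match ? match[1] : authorization;
}
```

Downstream authentication code can then keep working with the raw token, regardless of which form the client sent.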

It has also been added to our OpenAPI spec, which now displays the
authorization option.

Related to #4630. Doesn't fully close the issue as we're still using
some characters that are invalid according to the RFC, in particular
`*` and `[]`.

For safety reasons, this is behind a feature flag.

---------

Co-authored-by: Gastón Fournier <gaston@getunleash.io>

This change fixes the OpenAPI schema to disallow non-string properties

on the top level of the context (except, of course, the `properties`

object).

This means that we'll no longer be seeing issues with rendering

invalid contexts, because we don't accept them in the first place.

This solution comes with some tradeoffs discussed in the [PR](https://github.com/Unleash/unleash/pull/6676). Following on from that, this solution isn't optimal, but it's a good stop gap. A better solution (proposed in the PR discussion) has been added as an idea for future projects.

The bulk of the discussion around the solution is included here for reference:

@kwasniew:

Was it possible to pass non string properties with our UI before?

Is there a chance that something will break after this change?

@thomasheartman:

Good question and good looking out 😄

You **could** pass non-string, top-level properties into the API before. In other words, this would be allowed:

```js
{
    appName: "my-app",
    nested: { object: "accepted" }
}
```

But notably, non-string values under `properties` would **not** be accepted:

```js
{
    appName: "my-app",
    properties: {
        nested: { object: "not accepted" }
    }
}
```

**However**, the values would not contribute to the evaluation of any constraints (because their type is invalid), so they would effectively be ignored.

Now, however, you'll instead get a 400 saying that the "nested" value must be a string.

I would consider this a bug fix because:

- if you sent a nested object before, it was most likely an oversight

- if you sent the nested object on purpose, expecting it to work, you would be perplexed as to why it didn't work, as the API accepted it happily

Furthermore, the UI will also tell you that the property must be a string now if you try to do it from the UI.

On the other hand, this does mean that while you could send absolute garbage in before and we would just ignore it, we don't do that anymore. This does go against how we allow you to send anything for pretty much all other objects in our API.

However, the SDK context is special. Arbitrary keys aren't ignored, they're actually part of the context itself and as such should have a valid value.

So if anything breaks, I think it breaks in a way that tells you why something wasn't working before. However, I'd love to hear your take on it and we can re-evaluate whether this is the right fix, if you think it isn't.

@kwasniew:

Coming from the https://en.wikipedia.org/wiki/Robustness_principle mindset I'm thinking if ignoring the fields that are incorrect wouldn't be a better option. So we'd accept incorrect value and drop it instead of:

* failing with client error (as this PR) or

* saving incorrect value (as previous code we had)

@thomasheartman:

Yeah, I considered that too. In fact, that was my initial idea (for the reason you stated). However, there's a couple tradeoffs here (as always):

1. If we just ignore those values, the end user doesn't know what's happened unless they go and dig through the responses. And even then, they don't necessarily know why the value is gone.

2. As mentioned, for the context, arbitrary keys can't be ignored, because we use them to build the context. In other words, they're actually invalid input.

Now, I agree that you should be liberal in what you accept and try to handle things gracefully, but that means you need to have a sensible default to fall back to. Or, to quote the Wikipedia article (selectively; with added emphasis):

> programs that receive messages should accept non-conformant input **as long as the meaning is clear**.

In this case, the meaning isn't clear when you send extra context values that aren't strings.

For instance, what's the meaning here:

```js
{
    appName: "my-app",
    nested: { object: "accepted", more: { further: "nesting" } }
}
```

If you were trying to use the `nested` value as an object, then that won't work. Ideally, you should be alerted.

Should we "unwind" the object and add all string keys as context values? That doesn't sound very feasible **or** necessarily like the right thing.

Did you just intend to use the `appName` and for the `nested` object to be ignored?

And it's because of this caveat that I'm not convinced just ignoring the keys is the right thing to do. Because if you do, the user never knows they were ignored or why.

----

**However**, I'd be in favor of ignoring the keys if we could **also** give the users warnings at the same time. (Something like what we do in the CR api, right? Success with warnings?)

If we can tell the user that "we ignored the `a`, `b`, and `c` keys in the context you sent because they are invalid values. Here is the result of the evaluation without taking those keys into account: [...]", then I think that's the ideal solution.

But of course, the tradeoff is that that increases the complexity of the API and the complexity of the task. It also requires UI adjustments etc. This means that it's not a simple fix anymore, but more of a mini-project.

But, in the spirit of the playground, I think it would be a worthwhile thing to do because it helps people learn and understand how Unleash works.

Via the API you can currently create gradualRollout strategies without
any parameters set. When you visit the UI afterwards, you can't edit
such a strategy, because the UI reads the parameter list from the
database, sees that some parameters are required, and refuses to accept
the data. This PR adds defaults for gradualRollout strategies created
from the API, making sure gradual rollout strategies always have
`rollout`, `groupId` and `stickiness` set.
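
A sketch of what filling in the defaults could look like. The helper name and the default values used here (`'100'`, `'default'`, and the feature name as `groupId`) are assumptions for illustration; the description does not state the actual values.

```typescript
type StrategyParameters = Record<string, string>;

function withGradualRolloutDefaults(
    featureName: string,
    parameters: StrategyParameters = {},
): StrategyParameters {
    return {
        rollout: '100', // assumed default
        stickiness: 'default', // assumed default
        groupId: featureName, // assumed default
        // Anything the caller provided wins over the defaults.
        ...parameters,
    };
}
```

Spreading the caller's parameters last means an API client that already sends all three values sees no change in behaviour.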

Provides a store method for retrieving traffic usage data based on a
period parameter, plus the UI and a UI hook with the new chart for
displaying traffic usage data spread out over a selectable month.

In this PR we copied and adapted a plugin written by DX for highlighting
a column in the chart.

There are some minor improvements planned, which will come in a separate
PR: reversing the order in the legend and tooltip so the colors go from
light to dark, and adding a monthly sum below the legend.

## Discussion points

- Should any of this be extracted as a separate reusable component?

---------

Co-authored-by: Nuno Góis <github@nunogois.com>

## About the changes

There seems to be a typo in the authorization header. We're keeping the

old typo as preferred just in case, but if not present we'll default to

the authorization header (not authorisation).
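
In other words, the lookup order is roughly the following (a sketch; `tokenFromHeaders` and `RequestHeaders` are hypothetical names):

```typescript
type RequestHeaders = Record<string, string | undefined>;

// Prefer the old, misspelled header for backwards compatibility,
// then fall back to the correctly spelled one.
const tokenFromHeaders = (headers: RequestHeaders): string | undefined =>
    headers['authorisation'] ?? headers['authorization'];
```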

Not sure about the impact of this bug, as all registrations might be

using default project.

## About the changes

We see some logs with: `Failed to store events: Error: The query is

empty` which suggests we're not sending events to batchStore. This will

help us confirm that and will give us better insights