We need this PR to correctly set up CORS for streaming-related endpoints

in our spike and add the flag to our types.

---------

Co-authored-by: kwasniew <kwasniewski.mateusz@gmail.com>

This PR removes all references to the `featuresExportImport` flag.

The flag was introduced in [PR

#3411](https://github.com/Unleash/unleash/pull/3411) on March 29th 2023,

and the flag was archived on April 3rd. The flag has always defaulted to

true.

We've looked at the project that introduced the flag and have spoken to CS about it: we can find no reason to keep the flag around. So well remove it now.

<!-- Thanks for creating a PR! To make it easier for reviewers and

everyone else to understand what your changes relate to, please add some

relevant content to the headings below. Feel free to ignore or delete

sections that you don't think are relevant. Thank you! ❤️ -->

## About the changes

<!-- Describe the changes introduced. What are they and why are they

being introduced? Feel free to also add screenshots or steps to view the

changes if they're visual. -->

### Summary

- Add `PROJECT_ARCHIVED` event on `EVENT_MAP` to use

- Add a test case for `PROJECT_ARCHIVED` event formatting

- Add `PROJECT_ARCHIVED` event when users choose which events they send

to Slack

- Fix Slack integration document by adding `PROJECT_ARCHIVED`

### Example

The example message looks like the image below. I covered my email with

a black rectangle to protect my privacy 😄

The link refers `/projects-archive` to see archived projects.

<img width="529" alt="Slack message example"

src="https://github.com/user-attachments/assets/938c639f-f04a-49af-9b4a-4632cdea9ca7">

## Discussion points

<!-- Anything about the PR you'd like to discuss before it gets merged?

Got any questions or doubts? -->

I considered the reason why Unleash didn't implement to send

`PROJECT_ARCHIVED` message to Slack integration.

One thing I assumed that it is impossible to create a new project with

open source codes, which means it is only enabled in the enterprise

plan. However,

[document](https://docs.getunleash.io/reference/integrations/slack-app#events)

explains that users can send `PROJECT_CREATED` and `PROJECT_DELETED`

events to Slack, which are also available only in the enterprise plan,

hence it means we need to embrace all worthwhile events.

I think it is reasonable to add `PROJECT_ARCHIVED` event to Slack

integration because users, especially operators, need to track them

through Slack by separating steps, `_CREATED`, `_ARCHIVED`, and

`_DELETED`.

We want to prevent our users from defining multiple templates with the

same name. So this adds a unique index on the name column when

discriminator is template.

This PR fixes three things that were wrong with the lifecycle summary

count query:

1. When counting the number of flags in each stage, it does not take

into account whether a flag has moved out of that stage. So if you have

a flag that's gone through initial -> pre-live -> live, it'll be counted

for each one of those steps, not just the last one.

2. Some flags that have been archived don't have the corresponding

archived state row in the db. This causes them to count towards their

other recorded lifecycle stages, even when they shouldn't. This is

related to the previous one, but slightly different. Cross-reference the

features table's archived_at to make sure it hasn't been archived

3. The archived number should probably be all flags ever archived in the

project, regardless of whether they were archived before or after

feature lifecycles. So we should check the feature table's archived_at

flag for the count there instead

This PR:

- conditionally deprecates the project health report endpoint. We only

use this for technical debt dashboard that we're removing. Now it's

deprecated once you turn the simplifiy flag on.

- extracts the calculate project health function into the project health

functions file in the appropriate domain folder. That same function is

now shared by the project health service and the project status service.

For the last point, it's a little outside of how we normally do things,

because it takes its stores as arguments, but it slots in well in that

file. An option would be to make a project health read model and then

wire that up in a couple places. It's more code, but probably closer to

how we do things in general. That said, I wanted to suggest this because

it's quick and easy (why do much work when little work do trick?).

This PR updates the project status service (and schemas and UI) to use

the project's current health instead of the 4-week average.

I nabbed the `calculateHealthRating` from

`src/lib/services/project-health-service.ts` instead of relying on the

service itself, because that service relies on the project service,

which relies on pretty much everything in the entire system.

However, I think we can split the health service into a service that

*does* need the project service (which is used for 1 of 3 methods) and a

service (or read model) that doesn't. We could then rely on the second

one for this service without too much overhead. Or we could extract the

`calculateHealthRating` into a shared function that takes its stores as

arguments. ... but I suggest doing that in a follow-up PR.

Because the calculation has been tested other places (especially if we

rely on a service / shared function for it), I've simplified the tests

to just verify that it's present.

I've changed the schema's `averageHealth` into an object in case we want

to include average health etc. in the future, but this is up for debate.

This PR fixes the counting of unhealthy flags for the project status

page. The issue was that we were looking for `archived = false`, but we

don't set that flag in the db anymore. Instead, we set the `archived_at`

date, which should be null if the flag is unarchived.

**This migration introduces a query that calculates the licensed user

counts and inserts them into the licensed_users table.**

**The logic ensures that:**

1. All users created up to a specific date are included as active users

until they are explicitly deleted.

2. Deleted users are excluded after their deletion date, except when

their deletion date falls within the last 30 days or before their

creation date.

3. The migration avoids duplicating data by ensuring records are only

inserted if they don’t already exist in the licensed_users table.

**Logic Breakdown:**

**Identify User Events (user_events):** Extracts email addresses from

user-related events (user-created and user-deleted) and tracks the type

and timestamp of the event. This step ensures the ability to

differentiate between user creation and deletion activities.

**Generate a Date Range (dates):** Creates a continuous range of dates

spanning from the earliest recorded event up to the current date. This

ensures we analyze every date, even those without events.

**Determine Active Users (active_emails):** Links dates with user events

to calculate the status of each email address (active or deleted) on a

given day. This step handles:

- The user's creation date.

- The user's deletion date (if applicable).

**Calculate Daily Active User Counts (result):**

For each date, counts the distinct email addresses that are active based

on the conditions:

- The user has no deletion date.

- The user's deletion date is within the last 30 days relative to the

current date.

- The user's creation date is before the deletion date.

This PR adds the option to select potentially stale flags from the UI.

It also updates the name we use for parsing from the API: instead of

`potentiallyStale` we use `potentially-stale`. This follows the

precedent set by "kill switch" (which we send as 'kill-switch'), the

only other multi-word option that I could find in our filters.

This PR adds support for the `potentiallyStale` value in the feature

search API. The value is added as a third option for `state` (in

addition to `stale` and `active`). Potentially stale is a subset of

active flags, so stale flags are never considered potentially stale,

even if they have the flag set in the db.

Because potentially stale is a separate column in the db, this

complicates the query a bit. As such, I've created a specialized

handling function in the feature search store: if the query doesn't

include `potentiallyStale`, handle it as we did before (the mapping has

just been moved). If the query *does* contain potentially stale, though,

the handling is quite a bit more involved because we need to check

multiple different columns against each other.

In essence, it's based on this logic:

when you’re searching for potentially stale flags, you should only get flags that are active and marked as potentially stale. You should not get stale flags.

This can cause some confusion, because in the db, we don’t clear the potentially stale status when we mark a flag as stale, so we can get flags that are both stale and potentially stale.

However, as a user, if you’re looking for potentially stale flags, I’d be surprised to also get (only some) stale flags, because if a flag is stale, it’s definitely stale, not potentially stale.

This leads us to these six different outcomes we need to handle when your search includes potentially stale and stale or active:

1. You filter for “potentially stale” flags only. The API will give you only flags that are active and marked as potentially stale. You will not get stale flags.

2. You filter only for flags that are not potentially stale. You will get all flags that are active and not potentially stale and all stale flags.

3. You search for “is any of stale, potentially stale”. This is our “unhealthy flags” metric. You get all stale flags and all flags that are active and potentially stale

4. You search for “is none of stale, potentially stale”: This gives you all flags that are active and not potentially stale. Healthy flags, if you will.

5. “is any of active, potentially stale”: you get all active flags. Because we treat potentially stale as a subset of active, this is the same as “is active”

6. “is none of active, potentially stale”: you get all stale flags. As in the previous point, this is the same as “is not active”

This change adds a db migration to make the potentially_stale column

non-nullable. It'll set any NULL values to `false`.

In the down-migration, make the column nullable again.

## About the changes

- Remove `idNumberMiddleware` method and change to use `parameters`

field in `openApiService.validPath` method for the flexibility.

- Remove unnecessary `Number` type converting method and change them to

use `<{id: number}>` to specify the type.

### Reference

The changed response looks like the one below.

```JSON

{

"id":"8174a692-7427-4d35-b7b9-6543b9d3db6e",

"name":"BadDataError",

"message":"Request validation failed: your request body or params contain invalid data. Refer to the `details` list for more information.",

"details":[

{

"message":"The `/params/id` property must be integer. You sent undefined.",

"path":"/params/id"

}

]

}

```

I think it might be better to customize the error response, especially

`"You sent undefined."`, on another pull request if this one is

accepted. I prefer to separate jobs to divide the context and believe

that it helps reviewer easier to understand.

Remove everything related to the connected environment count for project

status. We decided that because we don't have anywhere to link it to at

the moment, we don't want to show it yet.

## About the changes

Builds on top of #8766 to use memoized results from stats-service.

Because stats service depends on version service, and to avoid making

the version service depend on stats service creating a cyclic

dependency. I've introduced a telemetry data provider. It's not clean

code, but it does the job.

After validating this works as expected I'll clean up

Added an e2e test validating that the replacement is correct:

[8475492](847549234c)

and it did:

https://github.com/Unleash/unleash/actions/runs/11861854341/job/33060032638?pr=8776#step:9:294

Finally, cleaning up version service

## About the changes

Our stats are used for many places and many times to publish prometheus

metrics and some other things.

Some of these queries are heavy, traversing all tables to calculate

aggregates.

This adds a feature flag to be able to memoize 1 minute (by default) how

long to keep the calculated values in memory.

We can use the key of the function to individually control which ones

are memoized or not and for how long using a numeric variant.

Initially, this will be disabled and we'll test in our instances first

This PR adds stale flag count to the project status payload. This is

useful for the project status page to show the number of stale flags in

the project.

Adding email_hash column to users table.

We will update all existing users to have hashed email.

All new users will also get the hash.

We are fine to use md5, because we just need uniqueness. We have emails

in events table stored anyways, so it is not sensitive.

This PR moves the project lifecycle summary to its own subdirectory and

adds files for types (interface) and a fake implementation.

It also adds a query for archived flags within the last 30 days taken

from `getStatusUpdates` in `src/lib/features/project/project-service.ts`

and maps the gathered data onto the expected structure. The expected

types have also been adjusted to account for no data.

Next step will be hooking it up to the project status service, adding

schema, and exposing it in the controller.

This PR adds more tests to check a few more cases for the lifecycle

calculation query. Specifically, it tests that:

- If we don't have any data for a stage, we return `null`.

- We filter on projects

- It correctly takes `0` days into account when calculating averages.

This PR adds a project lifecycle read model file along with the most

important (and most complicated) query that runs with it: calculating

the average time spent in each stage.

The calculation relies on the following:

- when calculating the average of a stage, only flags who have gone into

a following stage are taken into account.

- we'll count "next stage" as the next row for the same feature where

the `created_at` timestamp is higher than the current row

- if you skip a stage (go straight to live or archived, for instance),

that doesn't matter, because we don't look at that.

The UI only shows the time spent in days, so I decided to go with

rounding to days directly in the query.

## Discussion point:

This one uses a subquery, but I'm not sure it's possible to do without

it. However, if it's too expensive, we can probably also cache the value

somehow, so it's not calculated more than every so often.

This change introduces a new method `countProjectTokens` on the

`IApiTokenStore` interface. It also swaps out the manual filtering for

api tokens belonging to a project in the project status service.

Do not count stale flags as potentially stale flags to remove

duplicates.

Stale flags feel like more superior state and it should not show up

under potentially stale.

This PR adds member, api token, and segment counts to the project status

payload. It updates the schemas and adds the necessary stores to get

this information. It also adds a new query to the segments store for

getting project segments.

I'll add tests in a follow-up.

As part of the release plan template work. This PR adds the three events

for actions with the templates.

Actually activating milestones should probably trigger existing

FeatureStrategyAdd events when adding and FeatureStrategyRemove when

changing milestones.

This PR wires up the connectedenvironments data from the API to the

resources widget.

Additionally, it adjusts the orval schema to add the new

connectedEnvironments property, and adds a loading state indicator for

the resource values based on the project status endpoint response.

As was discussed in a previous PR, I think this is a good time to update

the API to include all the information required for this view. This

would get rid of three hooks, lots of loading state indicators (because

we **can** do them individually; check out

0a334f9892)

and generally simplify this component a bit.

Here's the loading state:

In some cases, people want to disable database migration. For example,

some people or companies want to grant whole permissions to handle the

schema by DBAs, not by application level hence I use

`parseEnvVarBoolean` to handle `disableMigration` option by environment

variable. I set the default value as `false` for the backward

compatibility.

This PR adds connected environments to the project status payload.

It's done by:

- adding a new `getConnectedEnvironmentCountForProject` method to the

project store (I opted for this approach instead of creating a new view

model because it already has a `getEnvironmentsForProject` method)

- adding the project store to the project status service

- updating the schema

For the schema, I opted for adding a `resources` property, under which I

put `connectedEnvironments`. My thinking was that if we want to add the

rest of the project resources (that go in the resources widget), it'd

make sense to group those together inside an object. However, I'd also

be happy to place the property on the top level. If you have opinions

one way or the other, let me know.

As for the count, we're currently only counting environments that have

metrics and that are active for the current project.

This was an oversight. The test would always fail after 2024-11-04,

because yesterday is no longer 2024-11-03. This way, we're using the

same string in both places.

Adding project status schema definition, controller, service, e2e test.

Next PR will add functionality for activity object.

---------

Co-authored-by: Thomas Heartman <thomas@getunleash.io>

The two lints being turned off are new for 1.9.x and caused a massive

diff inside frontend if activated. To reduce impact, these were turned off for

the merge. We might want to look at turning them back on once we're

ready to have a semantic / a11y refactor of our frontend.

Archived features can be searched now.

This is the backend and small parts of frontend preparing to add

filters, buttons etc in next PR.

---------

Co-authored-by: Thomas Heartman <thomas@getunleash.io>

This change fixes a bug in the event filter's `to` query parameter.

The problem was that in an attempt to make it inclusive, we also

stripped it of the `IS:` prefix, which meant it had no effect. This

change fixes that by first splitting the value, fixing only the

date (because we want it to include the entire day), and then joining

it back together.

We do some validation on flag names, but there's some cases that slip

through. These are some cases that we should handle better.

With `..` as a name, you can't go into the flag in Unleash and you can't

activate any environments because the it is interpreted as "go up a

level".

## About the changes

We have many aggregation queries that run on a schedule:

f63496d47f/src/lib/metrics.ts (L714-L719)

These staticCounters are usually doing db query aggregations that

traverse tables and we run all of them in parallel:

f63496d47f/src/lib/metrics.ts (L410-L412)

This can add strain to the db. This PR suggests a way of handling these

queries in a more structured way, allowing us to run them sequentially

(therefore spreading the load):

f02fe87835/src/lib/metrics-gauge.ts (L38-L40)

As an additional benefit, we get both the gauge definition and the

queries in a single place:

f02fe87835/src/lib/metrics.ts (L131-L141)

This PR only tackles 1 metric, and it only focuses on gauges to gather

initial feedback. The plan is to migrate these metrics and eventually

incorporate more types (e.g. counters)

---------

Co-authored-by: Nuno Góis <github@nunogois.com>

Give the ability to change when users are considered inactive via an

environment variable `USER_INACTIVITY_THRESHOLD_IN_DAYS` or

configuration option: `userInactivityThresholdInDays`. Default remains

180 days

## About the changes

This fixes#8029. How to reproduce the issue is in the ticket.

The issue happens because when a web app is hosted in the same domain as

Unleash UI and the web app uses unleash SDK to make requests to Unleash,

the browser automatically includes the cookie in the request headers,

because:

- The request URL matches the cookie's Path attribute (which it does in

this case).

- The request is sent to the same domain (which it is, since both apps

are under the same domain).

And this is by design in the HTTP cookie specification:

https://datatracker.ietf.org/doc/html/rfc6265

This PR avoids overriding the API user with the session user if there's

already an API user in the request. It's an alternative to

https://github.com/Unleash/unleash/pull/8434Closes#8029

This gives us better types for our wrapTimer function.

Maybe the type `(args: any) => any` could also be improved

---------

Co-authored-by: Nuno Góis <nuno@getunleash.io>

https://linear.app/unleash/issue/2-2787/add-openai-api-key-to-our-configuration

Adds the OpenAI API key to our configuration and exposes a new

`unleashAIAvailable` boolean in our UI config to let our frontend know

that we have configured this. This can be used together with our flag to

decide whether we should enable our experiment for our users.

This PR fixes a bug where the default project would have no listed

owners. The issue was that the default project has no user owners by

default, so we didn't get a result back when looking for user owners.

Now we check whether we have any owners for that project, and if we

don't, then we return the system user as an owner instead.

This also fixes an issue for the default project where you have no roles

(because by default, you don't) by updating the schema to allow an empty

list.

This PR contains a number of small updates to the dashboard schemas,

including rewording descriptions, changing numbers to integers, setting

minimum values.

This property does not seem to be used anywhere, so we can remove it.

Can't find any references in code here or in enterprise. Let's try it

and see if it breaks.

This PR updates the personal dashboard project endpoint to return owners

and roles. It also adds the impl for getting roles (via the access

store).

I'm filtering the roles for a project to only include project roles for

now, but we might wanna change this later.

Tests and UI update will follow.

This PR is part 1 of returning project owners and your project roles for

the personal dashboard single-project endpoint.

It moves the responsibility of adding owners and roles to the project to

the service from the controller and adds a new method to the project

owners read model to take care of it.

I'll add roles and tests in follow-up PRs.

Splitting #8271 into smaller pieces. This first PR will focus on making

access service handle empty string inputs gracefully and converting them

to null before inserting them into the database.

This PR hooks up the owners and admins of Unleash to the UI. They'll

only be visible in cases where you have no projects.

In addition, it adds Orval schemas for the new payload properties and

updates the generating schemas to fix some minor typing issues.

This PR adds tests for the new admins property of the personal dashboard

API payload.

It checks that only user admins are added and that their image URL is

not an empty string. In doing this, also fixes an issue where the image

URL wouldn't be generated correctly.

## Discussion points

Some of the test feels like it might be better testing on a deeper level

(i.e. the account store). However, from an initial glance, I think that

would require more setup and work, so I'm leaving it in the dashboard

test for now as that's where it's ultimately useful. But we can discuss

if we should move it.

Ideally `feature_lifecycle_stage_entered{stage="archived"}` would allow

me to see how many flags are archived per week.

It seems like the numbers for this is a bit off, and wanted to extend

our current `feature_toggle_update` counter with action details.

Adds Unleash admins to the personal dashboard payload.

Uses the access store (and a new method) to fetch admins and maps it to

a new `MinimalUser` type. We already have a `User` class, but it

contains a lot of information we don't care about here, such as `isAPI`,

SCIM data etc.

In the UI, admins will be shown to users who are not part of any

projects. This is the default state for new viewer users, and can also

happen for editors if you archive the default project, for instance.

Tests in a follow-up PR

This PR adds all user-type owners of projects that you have access to to

the personal dashboard payload. It adds the new `projectOwners` property

regardless of whether you have access to any projects or not because it

required less code and fewer conditionals, but we can do the filtering

if we want to.

To add the owners, it uses the private project checker to get accessible

projects before passing those to the project owner read model, which has

a new method to fetch user owners for projects.

This trims role names before validation and subsequent validation.

This fixes a bug where you could have names that were empty or that

were duplicates of other names, but with leading or trailing

spaces. (They display the same in the UI).

This does not modify how we handle descriptions in the API. While the

UI form requires you to enter a description, the API does not. As

such, we can't make that required now without it being a breaking

change.

This change removes the flag used to anonymize project owners on the

way out. It was an issue in demo when we'd forgotten to configure the

email encryption. However, this issue has been resolved and we can

remove this check now.

This PR adds project owner information to the personal dashboard's

project payload.

To do so, it uses the existing project owners read model.

I've had to make a few changes to the project owners read model to

accomodate this:

- make the input type to `addOwners` more lenient. We only need the

project ids, so we can make that the only required property

- fall back to using email as the name if the user has no name or

username (such as if you sign up with the demo auth)

This PR adds some of the necessary project data to the personal

dashboard API: project names and ids, and the roles that the user has in

each of these projects.

I have not added project owners yet, as that would increase the

complexity a bit and I'd rather focus on that in a separate PR.

I have also not added projects you are part of through a group, though I

have added a placeholder test for that. I will address this in a

follow-up.

## About the changes

When trying to send messages longer than 3000 chars we get this error:

```

[ERROR] web-api:WebClient:0 failed to match all allowed schemas [json-pointer:/blocks/0/text]

[ERROR] web-api:WebClient:0 must be less than 3001 characters [json-pointer:/blocks/0/text/text]

[2024-09-23T10:10:15.676] [WARN] addon/slack-app - All (1) Slack client calls failed with the following errors: A platform error occurred: {"ok":false,"error":"invalid_blocks","errors":["failed to match all allowed schemas [json-pointer:/blocks/0/text]","must be less than 3001 characters [json-pointer:/blocks/0/text/text]"],"response_metadata":{"messages":["[ERROR] failed to match all allowed schemas [json-pointer:/blocks/0/text]","[ERROR] must be less than 3001 characters [json-pointer:/blocks/0/text/text]"],"scopes":["incoming-webhook","users:read","channels:read","groups:read","mpim:read","im:read","users:read.email","chat:write"],"acceptedScopes":["chat:write"]}}

```

This PR trims the text length to 3000 chars.

We also upgrade slack API due to some security fixes:

https://github.com/slackapi/node-slack-sdk/releases/tag/%40slack%2Fweb-api%407.3.4

After checking the migration guide to v7 it seems that none of the

breaking changes affect us:

https://github.com/slackapi/node-slack-sdk/wiki/Migration-Guide-for-web%E2%80%90api-v7

## Testing

I did manual test this integration and the fix. The way to reproduce is

adding a very long strategy name and sending that as an update on Slack:

Now the event succeeds and we notice on the integration event log that

the message was trimmed:

---------

Co-authored-by: Nuno Góis <github@nunogois.com>

https://linear.app/unleash/issue/2-2664/implement-event-tooltips

Implements event tooltips in the new event timeline.

This leverages our current `feature-event-formatter-md` to provide both

a label and a summary of the event. Whenever our new `eventTimeline`

flag is enabled, we enrich our events in our event search endpoint with

this information. We've discussed different options here and reached the

conclusion that this is the best path forward for now. This way we are

being consistent, DRY, relatively performant and it also gives us a

happy path forward if we decide to scope in the event log revamp, since

this data will already be present there.

We also added a new `label` property to each of our event types

currently in our event formatter. This way we can have a concise,

human-readable name for each event type, instead of exposing the

internal event type string.

~~We also fixed the way the event formatter handled bold text (as in,

**bold**). Before, it was wrapping them in *single asterisks*, but now

we're using **double asterisks**. We also abstracted this away into a

helper method aptly named `bold`. Of course, this change meant that a

bunch of snapshots and tests needed to be updated.~~

~~This new `bold` method also makes it super easy to revert this

decision if we choose to, for any reason. However I believe we should

stick with markdown formatting, since it is the most commonly supported

formatting syntax, so I see this as an important fix. It's also in the

name of the formatter (`md`). I also believe bold was the original

intent. If we want italic formatting we should implement it separately

at a later point.~~

Edit: It was _bold_ of me to assume this would work out of the box on

Slack. It does when you manually try it on the app, but not when using

the Slack client. See: https://github.com/Unleash/unleash/pull/8222

We now have customers that exceed INT capacity, so we need to change

this to BIGINT in client_metrics_env_variants_daily as well.

Even heavy users only have about 10000 rows here, so should be a quick

enough operation.

https://linear.app/unleash/issue/2-2658/create-eventtimeline-feature-flag

Adds a new `eventTimeline` feature flag for the new event timeline

feature.

I think `eventTimeline` is an appropriate name given the feature

description and the way it is evolving, but I'm open to suggestions.

~~This also assumes that this feature will target OSS.~~ Confirmed that

this will be a premium feature.

## Background

In #6380 we fixed a privilege escalation bug that allowed members of a

project that had permission to add users to the project with roles that

had a higher permission set than themselves. The PR linked essentially

constricts you only be able to assign users to roles that you possess

yourself if you are not an Admin or Project owner.

This fix broke expectations for another customer who needed to have a

project owner without the DELETE_PROJECT permission. The fix above made

it so that their custom project owner role only was able to assign users

to the project with the role that they posessed.

## Fix

Instead of looking directly at which role the role granter has, this PR

addresses the issue by making the assessment based on the permission

sets of the user and the roles to be granted. If the granter has all the

permissions of the role being granted, the granter is permitted to

assign the role.

## Other considerations

The endpoint to get roles was changed in this PR. It previously only

retrieved the roles that the user had in the project. This no-longer

makes sense because the user should be able to see other project roles

than the one they themselves hold when assigning users to the project.

The drawback of returning all project roles is that there may be a

project role in the list that the user does not have access to assign,

because they do not hold all the permissions required of the role. This

was discussed internally and we decided that it's an acceptable

trade-off for now because the complexities of returning a role list

based on comparing permissions set is not trivial. We would have to

retrieve each project role with permissions from the database, and run

the same in-memory check against the users permission to determine which

roles to return from this endpoint. Instead we opted for returning all

project roles and display an error if you try to assign a role that you

do not have access to.

## Follow up

When this is merged, there's no longer need for the frontend logic that

filters out roles in the role assignment form. I deliberately left this

out of the scope for this PR because I couldn't wrap my head around

everything that was going on there and I thought it was better to pair

on this with @chriswk or @nunogois in order to make sure we get this

right as the logic for this filtering seemed quite complex and was

touching multiple different components.

---------

Co-authored-by: Fredrik Strand Oseberg <fredrikstrandoseberg@Fredrik-sin-MacBook-Pro.local>

This appears to have been an oversight in the original implementation

of this endpoint. This seems to be the primary point of this

permission. Additionally, the docs mention that this permission should

allow you to do just that.

Note: I've not added any tests for this, because we don't typically add

tests for it. If we have an example to follow, I'd be very happy to add

it, though

https://github.com/Unleash/unleash/pull/7795 mistakenly included a

mention that these environment variables can be used:

- `UNLEASH_DEFAULT_ADMIN_NAME`

- `UNLEASH_DEFAULT_ADMIN_EMAIL`

However that's not really the case, since we decided to remove their

respective implementation before merging that PR.

https://linear.app/unleash/issue/2-2592/updateimprove-a-segment-via-api-call

Related to https://github.com/Unleash/unleash/issues/7987

This does not make the endpoint necessarily better - It's still a PUT

that acts as a PUT in some ways (expects specific required fields to be

present, resets the project to `null` if it's not included in the body)

and a PATCH in others (ignores most fields if they're not included in

the body). We need to have a more in-depth discussion about developing

long-term strategies for our API and respective OpenAPI spec.

However this at least includes the proper schema for the request body,

which is slightly better than before.

Clearing onboarding tables, because the data is invalid and we want to

start tracking all of this for only new customers.

This migration must be applied after the new logic is implemented.

We are observing incorrect data in Prometheus, which is consistently

non-reproducible. After a restart, the issue does not occur, but if the

pods run for an extended period, they seem to enter a strange state

where the counters become entangled and start sharing arbitrary values

that are added to the counters.

For example, the `feature_lifecycle_stage_entered` counter gets an

arbitrary value, such as 12, added when `inc()` is called. The

`exceedsLimitErrorCounter` shows the same behavior, and the code

implementation is identical.

We also tested some existing `increase()` counters, and they do not

suffer from this issue.

All calls to `counter.labels(labels).inc(`) will be replaced by

`counter.increment()` to try to mitigate the issue.

Previously we expected the tag to look like `type:value`. Now we allow

everything after first colon, as the value and not break query

`type:this:still:is:value`.

Fixes a bug where if you had API keys using different casing for the

same type, they'd come out as different types in the API token count

map. To get around it, we normalize the keys to lowercase before

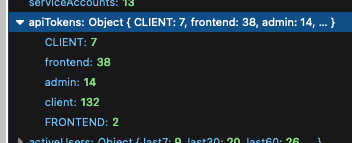

inserting them into the map, taking into account any previous values

that might have existed for that type.

Should fix issues like this:

Updates the instance stats endpoint with

- maxEnvironmentStrategies

- maxConstraints

- maxConstraintValues

It adds the following rows to the front end table:

- segments (already in the payload, just not used for the table before)

- API tokens (separate rows for type, + one for total) (also existed

before, but wasn't listed)

- Highest number of strategies used for a single flag in a single

environment

- Highest number of constraints used on a single strategy

- Highest number of values used for a single constraint

Turns out we've been trying to return API token data in instance stats

for a while, but that the serialization has failed. Serializing a JS map

just yields an empty object.

This PR fixes that serialization and also adds API tokens to the

instance stats schema (it wasn't before, but we did return it). Adding

it to the schema is also part of making resource usage visible as part

of the soft limits project.

Path types in our openapi are inferred as string (which is a sensible

default). But we can be more specific and provide the right type for

each parameter. This is one example of how we can do that

This PR fixes an issue where the number of flags belonging to a project

was wrong in the new getProjectsForAdminUi.

The cause was that we now join with the events table to get the most

"lastUpdatedAt" data. This meant that we got multiple rows for each

flag, so we counted the same flag multiple times. The fix was to use a

"distinct".

Additionally, I used this as an excuse to write some more tests that I'd

been thinking about. And in doing so also uncovered another bug that

would only ever surface in verrry rare conditions: if a flag had been

created in project A, but moved to project B AND the

feature-project-change event hadn't fired correctly, project B's last

updated could show data from that feature in project A.

I've also taken the liberty of doing a little bit of cleanup.

## About the changes

When storing last seen metrics we no longer validate at insert time that

the feature exists. Instead, there's a job cleaning up on a regular

interval.

Metrics for features with more than 255 characters, makes the whole

batch to fail, resulting in metrics being lost.

This PR helps mitigate the issue while also logs long name feature names

Implements empty responses for the fake project read model. Instead of

throwing a not implemented error, we'll return empty results.

This makes some of the tests in enterprise pass.

This PR touches up a few small things in the project read model.

Fixes:

Use the right method name in the query/method timer for

`getProjectsForAdminUi`. I'd forgotten to change the timer name from the

original method name.

Spells the method name correctly for the `getMembersCount` timer (it

used to be `getMemberCount`, but the method is callled `getMembersCount`

with a plural s).

Changes:

Call the `getMembersCount` timer from within the `getMembersCount`

method itself. Instead of setting that timer up from two different

places, we can call it in the method we're timing. This wasn't a problem

previously, because the method was only called from a single place.

Assuming we always wanna time that query, it makes more sense to put the

timing in the actual method.

Hooks up the new project read model and updates the existing project

service to use it instead when the flag is on.

In doing:

- creates a composition root for the read model

- includes it in IUnleashStores

- updates some existing methods to accept either the old or the new

model

- updates the OpenAPI schema to deprecate the old properties

These are both related to the work on the project list improvements

project.

The `projectListImprovements` flag will be used to enable disable the

new project list improvements.

The `useProjectReadModel` flag will be used to enable/disable the use

of the new project read model and is mostly a safety feature.

Creates a new project read model exposing data to be used for the UI and

for the insights module.

The model contains two public methods, both based on the project store's

`getProjectsWithCounts`:

- `getProjectsForAdminUi`

- `getProjectsForInsights`

This mirrors the two places where the base query is actually in use

today and adapts the query to those two explicit cases.

The new `getProjectsForAdminUi` method also contains data for last flag

update and last flag metric reported, as required for the new projects

list screen.

Additionally the read model contains a private `getMembersCount` method,

which is also lifted from the project store. This method was only used

in the old `getProjectsWithCounts` method, so I have also removed the

method from the public interface.

This PR does *not* hook up the new read model to anything or delete any

existing uses of the old method.

## Why?

As mentioned in the background, this query is used in two places, both

to get data for the UI (directly or indirectly). This is consistent with

the principles laid out in our [ADR on read vs write

models](https://docs.getunleash.io/contributing/ADRs/back-end/write-model-vs-read-models).

There is an argument to be made, however, that the insights module uses

this as an **internal** read model, but the description of an internal

model ("Internal read models are typically narrowly focused on answering

one question and usually require simple queries compared to external

read models") does not apply here. It's closer to the description of

external read models: "View model will typically join data across a few

DB tables" for display in the UI.

## Discussion points

### What about properties on the schema that are now gone?

The `project-schema`, which is delivered to the UI through the

`getProjects` endpoint (and nowhere else, it seems), describes

properties that will no longer be sent to the front end, including

`defaultStickiness`, `avgTimeToProduction`, and more. Can we just stop

sending them or is that a breaking change?

The schema does not define them as required properties, so in theory,

not sending them isn't breaking any contracts. We can deprecate the

properties and just not populate them anymore.

At least that's my thought on it. I'm open to hearing other views.

### Can we add the properties in fewer lines of code?

Yes! The [first commit in this PR

(b7534bfa)](b7534bfa07)

adds the two new properties in 8 lines of code.

However, this comes at the cost of diluting the `getProjectsWithCounts`

method further by adding more properties that are not used by the

insights module. That said, that might be a worthwhile tradeoff.

## Background

_(More [details in internal slack

thread](https://unleash-internal.slack.com/archives/C046LV6HH6W/p1723716675436829))_

I noticed that the project store's `getProjectWithCounts` is used in

exactly two places:

1. In the project service method which maps directly to the project

controller (in both OSS and enterprise).

2. In the insights service in enterprise.

In the case of the controller, that’s the termination point. I’d guess

that when written, the store only served the purpose of showing data to

the UI.

In the event of the insights service, the data is mapped in

getProjectStats.

But I was a little surprised that they were sharing the same query, so I

decided to dig a little deeper to see what we’re actually using and what

we’re not (including the potential new columns). Here’s what I found.

Of the 14 already existing properties, insights use only 7 and the

project list UI uses only 10 (though the schema mentions all 14 (as far

as I could tell from scouring the code base)). Additionally, there’s two

properties that I couldn’t find any evidence of being used by either:

- default stickiness

- updatedAt (this is when the project was last updated; not its flags)

During adding privateProjectsChecker, I saw that events composition root

is not used almost at all.

Refactored code so we do not call new EventService anymore.

<!-- Thanks for creating a PR! To make it easier for reviewers and

everyone else to understand what your changes relate to, please add some

relevant content to the headings below. Feel free to ignore or delete

sections that you don't think are relevant. Thank you! ❤️ -->

## About the changes

When reading feature env strategies and there's no segments it returns

empty list of segments now. Previously it was undefined leading to

overly verbose change request diffs.

<img width="669" alt="Screenshot 2024-08-14 at 16 06 14"

src="https://github.com/user-attachments/assets/1ac6121b-1d6c-48c6-b4ce-3f26c53c6694">

### Important files

<!-- PRs can contain a lot of changes, but not all changes are equally

important. Where should a reviewer start looking to get an overview of

the changes? Are any files particularly important? -->

## Discussion points

<!-- Anything about the PR you'd like to discuss before it gets merged?

Got any questions or doubts? -->

https://linear.app/unleash/issue/2-2518/figure-out-how-to-create-the-initial-admin-user-in-unleash

The logic around `initAdminUser` that was introduced in

https://github.com/Unleash/unleash/pull/4927 confused me a bit. I wrote

new tests with what I assume are our expectations for this feature and

refactored the code accordingly, but would like someone to confirm that

it makes sense to them as well.

The logic was split into 2 different methods: one to get the initial

invite link, and another to send a welcome email. Now these two methods

are more granular than the previous alternative and can be used

independently of creating a new user.

---------

Co-authored-by: Gastón Fournier <gaston@getunleash.io>

For easy gitar integration, we need to have boolean in the event

payload.

We might rethink it when we add variants, but currently enabled with

variants is not used.

Changes the event search handling, so that searching by user uses the

user's ID, not the "createdBy" name in the event. This aligns better

with what the OpenAPI schema describes it.

After adding an index, the time for the new event search on 100k events

decreased from 5000ms to 4ms. This improvement is due to the query using

an index scan instead of a sequence scan.

Encountered this case after encrypting an already long email address.

This should mitigate the issue in demo instance. I don't think it's a

big issue to ignore the length when validating an email address cause

this is already limited at the DB layer by the column length

Adds an endpoint to return all event creators.

An interesting point is that it does not return the user object, but

just created_by as a string. This is because we do not store user IDs

for events, as they are not strictly bound to a user object, but rather

a historical user with the name X.

Previously people were able to send random data to feature type. Now it

is validated.

Fixes https://github.com/Unleash/unleash/issues/7751

---------

Co-authored-by: Thomas Heartman <thomas@getunleash.io>

Changed the url of event search to search/events to align with

search/features. With that created a search controller to keep all

searches under there.

Added first test.

This PR adds Grafana gauges for all the existing resource limits. The

primary purpose is to be able to use this in alerting. Secondarily, we

can also use it to get better insights into how many customers have

increased their limits, as well as how many people are approaching their

limit, regdardless of whether it's been increased or not.

## Discussion points

### Implementation

The first approach I took (in

87528b4c67),

was to add a new gauge for each resource limit. However, there's a lot

of boilerplate for it.

I thought doing it like this (the current implementation) would make it

easier. We should still be able to use the labelName to collate this in

Grafana, as far as I understand? As a bonus, we'd automatically get new

resource limits when we add them to the schema.

``` tsx

const resourceLimit = createGauge({

name: 'resource_limit',

help: 'The maximum number of resources allowed.',

labelNames: ['resource'],

});

// ...

for (const [resource, limit] of Object.entries(config.resourceLimits)) {

resourceLimit.labels({ resource }).set(limit);

}

```

That way, when checking the stats, we should be able to do something

like this:

``` promql

resource_limit{resource="constraintValues"}

```

### Do we need to reset gauges?

I noticed that we reset gauges before setting values in them all over

the place. I don't know if that's necessary. I'd like to get that double

clarified before merging this.

https://linear.app/unleash/issue/2-2501/adapt-origin-middleware-to-stop-logging-ui-requests-and-start

This adapts the new origin middleware to stop logging UI requests (too

noisy) and adds new Prometheus metrics.

<img width="745" alt="image"

src="https://github.com/user-attachments/assets/d0c7f51d-feb6-4ff5-b856-77661be3b5a9">

This should allow us to better analyze this data. If we see a lot of API

requests, we can dive into the logs for that instance and check the

logged data, like the user agent.

This PR adds some helper methods to make listening and emitting metric

events more strict in terms of types. I think it's a positive change

aligned with our scouting principle, but if you think it's complex and

does not belong here I'm happy with dropping it.

Add ability to format format event as Markdown in generic webhooks,

similar to Datadog integration.

Closes https://github.com/Unleash/unleash/issues/7646

Co-authored-by: Nuno Góis <github@nunogois.com>

https://linear.app/unleash/issue/2-2469/keep-the-latest-event-for-each-integration-configuration

This makes it so we keep the latest event for each integration

configuration, along with the previous logic of keeping the latest 100

events of the last 2 hours.

This should be a cheap nice-to-have, since now we can always know what

the latest integration event looked like for each integration

configuration. This will tie-in nicely with the next task of making the

latest integration event state visible in the integration card.

Also improved the clarity of the auto-deletion explanation in the modal.

This PR adds the UI part of feature flag collaborators. Collaborators are hidden on windows smaller than size XL because we're not sure how to deal with them in those cases yet.

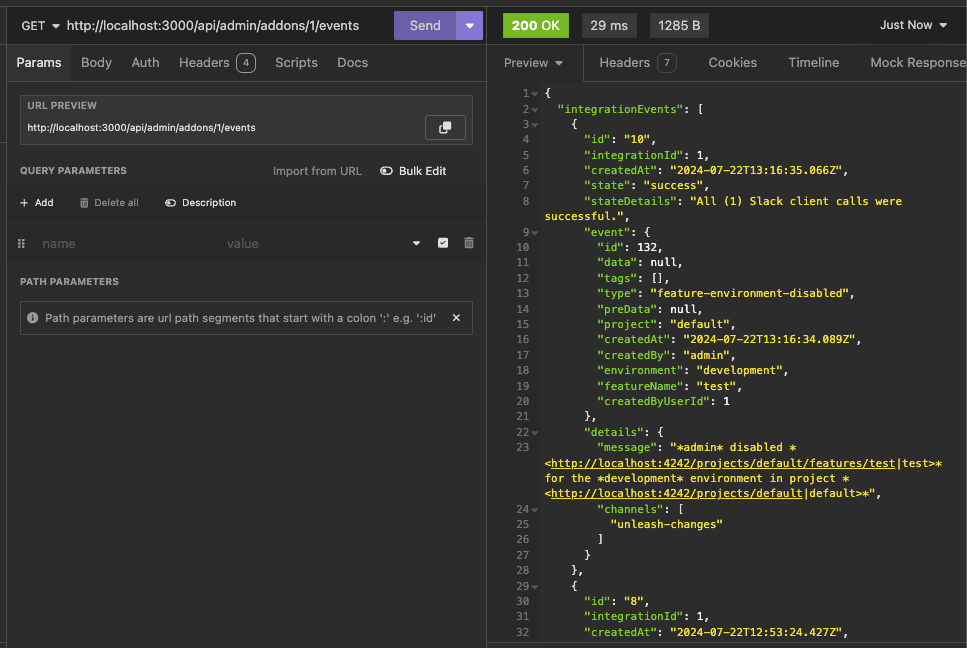

https://linear.app/unleash/issue/2-2439/create-new-integration-events-endpointhttps://linear.app/unleash/issue/2-2436/create-new-integration-event-openapi-schemas

This adds a new `/events` endpoint to the Addons API, allowing us to

fetch integration events for a specific integration configuration id.

Also includes:

- `IntegrationEventsSchema`: New schema to represent the response object

of the list of integration events;

- `yarn schema:update`: New `package.json` script to update the OpenAPI

spec file;

- `BasePaginationParameters`: This is copied from Enterprise. After

merging this we should be able to refactor Enterprise to use this one

instead of the one it has, so we don't repeat ourselves;

We're also now correctly representing the BIGSERIAL as BigInt (string +

pattern) in our OpenAPI schema. Otherwise our validation would complain,

since we're saying it's a number in the schema but in fact returning a

string.

This PR allows you to gradually lower constraint values, even if they're

above the limits.

It does, however, come with a few caveats because of how Unleash deals

with constraints:

Constraints are just json blobs. They have no IDs or other

distinguishing features. Because of this, we can't compare the current

and previous state of a specific constraint.

What we can do instead, is to allow you to lower the amount of

constraint values if and only if the number of constraints hasn't

changed. In this case, we assume that you also haven't reordered the

constraints (not possible from the UI today). That way, we can compare

constraint values between updated and existing constraints based on

their index in the constraint list.

It's not foolproof, but it's a workaround that you can use. There's a

few edge cases that pop up, but that I don't think it's worth trying to

cover:

Case: If you **both** have too many constraints **and** too many

constraint values

Result: You won't be allowed to lower the amount of constraints as long

as the amount of strategy values is still above the limit.

Workaround: First, lower the amount of constraint values until you're

under the limit and then lower constraints. OR, set the constraint you

want to delete to a constraint that is trivially true (e.g. `currentTime

> yesterday` ). That will essentially take that constraint out of the

equation, achieving the same end result.

Case: You re-order constraints and at least one of them has too many

values

Result: You won't be allowed to (except for in the edge case where the

one with too many values doesn't move or switches places with another

one with the exact same amount of values).

Workaround: We don't need one. The order of constraints has no effect on

the evaluation.

https://linear.app/unleash/issue/2-2450/register-integration-events-webhook

Registers integration events in the **Webhook** integration.

Even though this touches a lot of files, most of it is preparation for

the next steps. The only actual implementation of registering

integration events is in the **Webhook** integration. The rest will

follow on separate PRs.

Here's an example of how this looks like in the database table:

```json

{

"id": 7,

"integration_id": 2,

"created_at": "2024-07-18T18:11:11.376348+01:00",

"state": "failed",

"state_details": "Webhook request failed with status code: ECONNREFUSED",

"event": {

"id": 130,

"data": null,

"tags": [],

"type": "feature-environment-enabled",

"preData": null,

"project": "default",

"createdAt": "2024-07-18T17:11:10.821Z",

"createdBy": "admin",

"environment": "development",

"featureName": "test",

"createdByUserId": 1

},

"details": {

"url": "http://localhost:1337",

"body": "{ \"id\": 130, \"type\": \"feature-environment-enabled\", \"createdBy\": \"admin\", \"createdAt\": \"2024-07-18T17: 11: 10.821Z\", \"createdByUserId\": 1, \"data\": null, \"preData\": null, \"tags\": [], \"featureName\": \"test\", \"project\": \"default\", \"environment\": \"development\" }"

}

}

```

This PR updates the limit validation for constraint numbers on a single

strategy. In cases where you're already above the limit, it allows you

to still update the strategy as long as you don't add any **new**

constraints (that is: the number of constraints doesn't increase).

A discussion point: I've only tested this with unit tests of the method

directly. I haven't tested that the right parameters are passed in from

calling functions. The main reason being that that would involve

updating the fake strategy and feature stores to sync their flag lists

(or just checking that the thrown error isn't a limit exceeded error),

because right now the fake strategy store throws an error when it

doesn't find the flag I want to update.

https://linear.app/unleash/issue/2-2453/validate-patched-data-against-schema

This adds schema validation to patched data, fixing potential issues of

patching data to an invalid state.

This can be easily reproduced by patching a strategy constraints to be

an object (invalid), instead of an array (valid):

```sh

curl -X 'PATCH' \

'http://localhost:4242/api/admin/projects/default/features/test/environments/development/strategies/8cb3fec6-c40a-45f7-8be0-138c5aaa5263' \

-H 'accept: application/json' \

-H 'Content-Type: application/json' \

-d '[

{

"path": "/constraints",

"op": "replace",

"from": "/constraints",

"value": {}

}

]'

```

Unleash will accept this because there's no validation that the patched

data actually looks like a proper strategy, and we'll start seeing

Unleash errors due to the invalid state.

This PR adapts some of our existing logic in the way we handle

validation errors to support any dynamic object. This way we can perform

schema validation with any object and still get the benefits of our

existing validation error handling.

This PR also takes the liberty to expose the full instancePath as

propertyName, instead of only the path's last section. We believe this

has more upsides than downsides, especially now that we support the

validation of any type of object.

This PR adds prometheus metrics for when users attempt to exceed the

limits for a given resource.

The implementation sets up a second function exported from the

ExceedsLimitError file that records metrics and then throws the error.

This could also be a static method on the class, but I'm not sure that'd

be better.

This PR updates the OpenAPI error converter to also work for errors with

query parameters.

We previously only sent the body of the request along with the error,

which meant that query parameter errors would show up incorrectly.

For instance given a query param with the date format and the invalid

value `01-2020-01`, you'd previously get the message:

> The `from` value must match format "date". You sent undefined

With this change, you'll get this instead:

> The `from` value must match format "date". You sent "01-2020-01".

The important changes here are two things:

- passing both request body and query params

- the 3 lines in `fromOpenApiValidationError` that check where we should

get the value you sent from.

The rest of it is primarily updating tests to send the right arguments

and some slight rewording to more accurately reflect that this can be

either request body or query params.

https://linear.app/unleash/issue/2-2435/create-migration-for-a-new-integration-events-table

Adds a DB migration that creates the `integration_events` table:

- `id`: Auto-incrementing primary key;

- `integration_id`: The id of the respective integration (i.e.

integration configuration);

- `created_at`: Date of insertion;

- `state`: Integration event state, as text. Can be anything we'd like,

but I'm thinking this will be something like:

- Success ✅

- Failed ❌

- SuccessWithErrors ⚠️

- `state_details`: Expands on the previous column with more details, as

text. Examples:

- OK. Status code: 200

- Status code: 429 - Rate limit reached

- No access token provided

- `event`: The whole event object, stored as a JSON blob;

- `details`: JSON blob with details about the integration execution.

Will depend on the integration itself, but for example:

- Webhook: Request body

- Slack App: Message text and an array with all the channels we're

posting to

I think this gives us enough flexibility to cover all present and

(possibly) future integrations, but I'd like to hear your thoughts.

I'm also really torn on what to call this table:

- `integration_events`: Consistent with the feature name. Addons are now

called integrations, so this would be consistent with the new thing;

- `addon_events`: Consistent with the existing `addons` table.

Our CSP reports that unsafe-inline is not recommended for styleSrc. This

PR adds a flag for making it possible to remove this element of our CSP

headers. It should allow us to see what (if anything) breaks hard.

We'll store hashes for the last 5 passwords, fetch them all for the user

wanting to change their password, and make sure the password does not

verify against any of the 5 stored hashes.

Includes some password-related UI/UX improvements and refactors. Also

some fixes related to reset password rate limiting (instead of an

unhandled exception), and token expiration on error.

---------

Co-authored-by: Nuno Góis <github@nunogois.com>

PR #7519 introduced the pattern of using `createApiTokenService` instead

of newing it up. This usage was introduced in a concurrent PR (#7503),

so we're just cleaning up and making the usage consistent.

Deletes API tokens bound to specific projects when the last project they're mapped to is deleted.

---------

Co-authored-by: Tymoteusz Czech <2625371+Tymek@users.noreply.github.com>

Co-authored-by: Thomas Heartman <thomas@getunleash.io>

If you have SDK tokens scoped to projects that are deleted, you should

not get access to any flags with those.

---------

Co-authored-by: David Leek <david@getunleash.io>

This PR adds a feature flag limit to Unleash. It's set up to be

overridden in Enterprise, where we turn the limit up.

I've also fixed a couple bugs in the fake feature flag store.

This adds an extended metrics format to the metrics ingested by Unleash

and sent by running SDKs in the wild. Notably, we don't store this

information anywhere new in this PR, this is just streamed out to

Victoria metrics - the point of this project is insight, not analysis.

Two things to look out for in this PR:

- I've chosen to take extend the registration event and also send that

when we receive metrics. This means that the new data is received on

startup and on heartbeat. This takes us in the direction of collapsing

these two calls into one at a later point

- I've wrapped the existing metrics events in some "type safety", it

ain't much because we have 0 type safety on the event emitter so this

also has some if checks that look funny in TS that actually check if the

data shape is correct. Existing tests that check this are more or less

preserved

This PR adds the back end for API token resource limits.

It adds the limit to the schema and checks the limit in the service.

## Discussion points

The PAT service uses a different service and different store entirely,

so I have not included testing any edge cases where PATs are included.

However, that could be seen as "knowing too much". We could add tests

that check both of the stores in tandem, but I think it's overkill for

now.

This PR updates the Unleash UI to use the new environment limit.

As it turns out, we already had an environment limit in the UI, but it

was hardcoded (luckily, its value is the same as the new default value

🥳).

In addition to the existing places this limit was used, it also disables

the "new environment" button if you've reached the limit. Because this

limit already exists, I don't think we need a flag for it. The only

change is that you can't click a button (that should be a link!) that

takes you to a page you can't do anything on.

This PR adds limits for environments to the resource limit schema. The

actual limiting will have to be done in Enterprise, however, so this is

just laying the groundwork.

This fixes the issue where project names that are 100 characters long

or longer would cause the project creation to fail. This is because

the resulting ID would be longer than the 100 character limit imposed

by the back end.

We solve this by capping the project ID to 90 characters, which leaves

us with 10 characters for the suffix, meaning you can have 1 billion

projects (999,999,999 + 1) that start with the same 90

characters (after slugification) before anything breaks.

It's a little shorter than what it strictly has to be (we could

probably get around with 95 characters), but at this point, you're

reaching into edge case territory anyway, and I'd rather have a little

too much wiggle room here.